High-Dimensional Variance

A useful view of a covariance matrix is that it is a natural generalization of variance to higher dimensions. I explore this idea.

When $X$ is a random scalar, we call $\mathbb{V}[X]$ the variance of $X$, and define it as the average squared distance from the mean:

$$
\mathbb{V}[X] = \mathbb{E}\left[(X - \mathbb{E}[X])^2\right].
$$

The mean $\mathbb{E}[X]$ is a measure of the central tendency of $X$, while the variance $\mathbb{V}[X]$ is a measure of the dispersion about this mean. Both metrics are moments of the distribution.

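However, when $\mathbf{X}$ is a random vector or an ordered list of random scalars

$$
\mathbf{X} = \begin{bmatrix} X_1 \\ \vdots \\ X_n \end{bmatrix},
$$

then, at least in my experience, people do not talk about the variance of $\mathbf{X}$; rather, they talk about the covariance matrix of $\mathbf{X}$, denoted $\text{cov}[\mathbf{X}]$:

$$
\text{cov}[\mathbf{X}] = \mathbb{E}\left[(\mathbf{X} - \mathbb{E}[\mathbf{X}])(\mathbf{X} - \mathbb{E}[\mathbf{X}])^{\top}\right].
$$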

Now the definitions of variance and of the covariance matrix look quite similar, and I think it is natural to eventually ask: is a covariance matrix just a high- or multi-dimensional variance of $\mathbf{X}$? Would the notation $\mathbb{V}[\mathbf{X}]$ and the name "variance" make sense for it?

Of course, this is not my idea, but it was not how I was taught to think about covariance matrices, and I think it is an illuminating connection. So let’s explore this idea that a covariance matrix is just high-dimensional variance.

Definitions

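To start, it is clear from the definitions that a covariance matrix is related to variance. We can write the outer product in the definition of $\text{cov}[\mathbf{X}]$ explicitly as:

$$
\text{cov}[\mathbf{X}] =
\begin{bmatrix}
\mathbb{E}[(X_1 - \mathbb{E}[X_1])(X_1 - \mathbb{E}[X_1])] & \cdots & \mathbb{E}[(X_1 - \mathbb{E}[X_1])(X_n - \mathbb{E}[X_n])] \\
\vdots & \ddots & \vdots \\
\mathbb{E}[(X_n - \mathbb{E}[X_n])(X_1 - \mathbb{E}[X_1])] & \cdots & \mathbb{E}[(X_n - \mathbb{E}[X_n])(X_n - \mathbb{E}[X_n])]
\end{bmatrix}.
$$

Clearly the diagonal elements in $\text{cov}[\mathbf{X}]$ are the variances of the scalars $X_i$. So $\text{cov}[\mathbf{X}]$ still captures the dispersion of each $X_i$.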

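But what are the cross-terms? These are the covariances of the pairwise combinations of $X_i$ and $X_j$, defined as

$$
\text{cov}[X_i, X_j] = \mathbb{E}\left[(X_i - \mathbb{E}[X_i])(X_j - \mathbb{E}[X_j])\right].
$$

An obvious observation is that this definition of covariance generalizes the definition of variance. Thus, variance ($\mathbb{V}[X_i]$) is simply a special case of covariance:

$$
\mathbb{V}[X_i] = \text{cov}[X_i, X_i].
$$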
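
As a rough illustration of these definitions, here is a minimal NumPy sketch that estimates a covariance matrix as an average of outer products and checks that its diagonal holds the per-component variances. The data, the mixing matrix, and the variable names are hypothetical and chosen only for illustration.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical data: 1000 samples of a 3-dimensional random vector, one per row.
mixing = np.array([[1.0, 0.5, 0.0],
                   [0.0, 1.0, 0.3],
                   [0.0, 0.0, 2.0]])
samples = rng.normal(size=(1000, 3)) @ mixing

# Sample version of E[(X - E[X])(X - E[X])^T]: average the outer products.
centered = samples - samples.mean(axis=0)
cov = centered.T @ centered / len(samples)

# Sample version of E[(X_i - E[X_i])^2] for each component.
variances = samples.var(axis=0)

print(np.round(cov, 3))
print(np.round(variances, 3))
```

The estimate normalizes by the number of samples, which matches `np.cov(samples, rowvar=False, bias=True)`.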

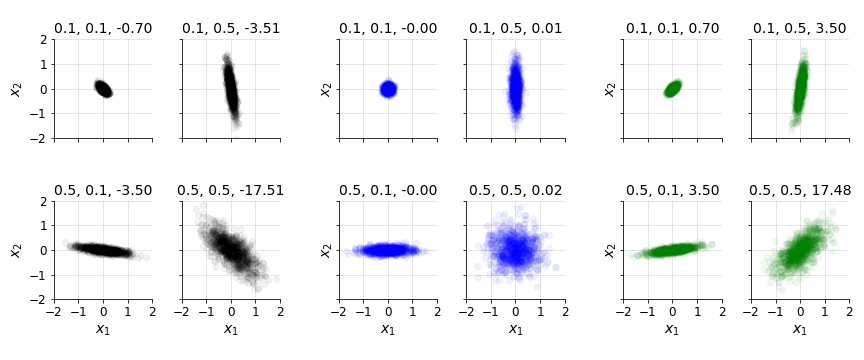

Furthermore, we can see that $\text{cov}[X_i, X_j]$ is not just a function of the univariate variance of each random variable; it is also a function of whether the two variables are correlated with each other. As a simple thought experiment, imagine that $X_i$ and $X_j$ both had high variances but were uncorrelated with each other, meaning that there was no relationship between large (small) values of $X_i$ and large (small) values of $X_j$. Then we would still expect $\text{cov}[X_i, X_j]$ to be small (Figure 1).

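This is the intuitive reason that $\text{cov}[X_i, X_j]$ can be written in terms of the Pearson correlation coefficient $\rho_{ij}$:

$$
\text{cov}[X_i, X_j] = \rho_{ij} \sqrt{\mathbb{V}[X_i]} \sqrt{\mathbb{V}[X_j]}.
$$

Again, this generalizes the special case $\mathbb{V}[X_i] = \text{cov}[X_i, X_i]$, but with $\rho_{ii} = 1$.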
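
The thought experiment can be sketched numerically. The snippet below is a hedged illustration, not part of the original argument: it draws two independent (hence uncorrelated) variables with large variances, using hypothetical names and a made-up scale, and confirms that the sample covariance factors into the sample correlation times the two standard deviations.

```python
import numpy as np

rng = np.random.default_rng(0)
n = 100_000

# Hypothetical data: two independent variables, each with standard deviation 10.
x_i = rng.normal(scale=10.0, size=n)
x_j = rng.normal(scale=10.0, size=n)

def covariance(u, v):
    """Sample version of E[(U - E[U])(V - E[V])]."""
    return np.mean((u - u.mean()) * (v - v.mean()))

cov_ij = covariance(x_i, x_j)
rho_ij = np.corrcoef(x_i, x_j)[0, 1]

# Covariance equals correlation times the two standard deviations.
cov_from_rho = rho_ij * x_i.std() * x_j.std()

print(f"variances: {x_i.var():.1f}, {x_j.var():.1f}")
print(f"covariance: {cov_ij:.3f}, correlation: {rho_ij:.4f}")
```

Both variances are near 100, yet the covariance stays close to zero because the correlation is close to zero.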

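Writing $\sigma_i := \sqrt{\mathbb{V}[X_i]}$ for the standard deviation of $X_i$ and $\rho_{ij}$ for the Pearson correlation between $X_i$ and $X_j$, we can rewrite the covariance matrix as

$$
\text{cov}[\mathbf{X}] =
\begin{bmatrix}
\sigma_1^2 & \rho_{12} \sigma_1 \sigma_2 & \cdots & \rho_{1n} \sigma_1 \sigma_n \\
\rho_{21} \sigma_2 \sigma_1 & \sigma_2^2 & \cdots & \rho_{2n} \sigma_2 \sigma_n \\
\vdots & \vdots & \ddots & \vdots \\
\rho_{n1} \sigma_n \sigma_1 & \rho_{n2} \sigma_n \sigma_2 & \cdots & \sigma_n^2
\end{bmatrix}.
$$

And with a little algebra, we can decompose this matrix into a form that looks strikingly like a multi-dimensional version of the scalar identity $\text{cov}[X_i, X_j] = \rho_{ij} \sigma_i \sigma_j$:

$$
\text{cov}[\mathbf{X}] =
\begin{bmatrix}
\sigma_1 & & \\
& \ddots & \\
& & \sigma_n
\end{bmatrix}
\begin{bmatrix}
1 & \rho_{12} & \cdots & \rho_{1n} \\
\rho_{21} & 1 & \cdots & \rho_{2n} \\
\vdots & \vdots & \ddots & \vdots \\
\rho_{n1} & \rho_{n2} & \cdots & 1
\end{bmatrix}
\begin{bmatrix}
\sigma_1 & & \\
& \ddots & \\
& & \sigma_n
\end{bmatrix}.
$$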

The middle matrix is the correlation matrix, which captures the Pearson (linear) correlation between all the variables in $\mathbf{X}$. So a covariance matrix of $\mathbf{X}$ captures both the dispersion of each $X_i$ and how it covaries with the other random variables. If we think of scalar variance as a univariate covariance matrix, then the one-dimensional case can be written as

$$
\mathbb{V}[X] = \sqrt{\mathbb{V}[X]} \cdot 1 \cdot \sqrt{\mathbb{V}[X]}.
$$

This one-dimensional form is not useful from a practical point of view, but in my mind, it underscores the view that univariate variance is a special case of covariance (matrices).

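Finally, note that this decomposition gives us a useful way to compute the correlation matrix $\mathbf{C}$ from the covariance matrix $\text{cov}[\mathbf{X}]$. Let $\mathbf{D}$ be the diagonal matrix whose entries are the standard deviations $\sqrt{\mathbb{V}[X_1]}, \dots, \sqrt{\mathbb{V}[X_n]}$. Then

$$
\mathbf{C} = \mathbf{D}^{-1} \, \text{cov}[\mathbf{X}] \, \mathbf{D}^{-1},
\qquad
\mathbf{D}^{-1} = \text{diag}\left(\frac{1}{\sqrt{\mathbb{V}[X_1]}}, \dots, \frac{1}{\sqrt{\mathbb{V}[X_n]}}\right).
$$

This is easy to compute because the inverse of a diagonal matrix is obtained by taking the reciprocal of each element along the diagonal.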
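
A minimal sketch of this computation in NumPy follows. The function name `correlation_from_covariance` and the example matrix are hypothetical, chosen only to make the arithmetic easy to follow.

```python
import numpy as np

def correlation_from_covariance(cov):
    """Rescale a covariance matrix by the inverse standard deviations."""
    std = np.sqrt(np.diag(cov))
    # Inverting a diagonal matrix just takes reciprocals of its diagonal.
    d_inv = np.diag(1.0 / std)
    return d_inv @ cov @ d_inv

# Hypothetical covariance: standard deviations 2 and 1, covariance 1.2.
cov = np.array([[4.0, 1.2],
                [1.2, 1.0]])
corr = correlation_from_covariance(cov)
print(corr)

# Going the other way recovers the covariance matrix.
d = np.diag(np.sqrt(np.diag(cov)))
reconstructed = d @ corr @ d
```

Here the off-diagonal correlation is 1.2 / (2 · 1) = 0.6.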

Properties

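Some important properties of univariate variance have multidimensional analogs. For example, let $a$ be a non-random number. Recall that the variance of $aX$ scales with $a^2$ or that

$$
\mathbb{V}[aX] = a^2 \, \mathbb{V}[X].
$$

And in the general case, $\mathbf{A}$ is a non-random matrix and

$$
\text{cov}[\mathbf{A}\mathbf{X}] = \mathbf{A} \, \text{cov}[\mathbf{X}] \, \mathbf{A}^{\top}.
$$

So both $\mathbb{V}[X]$ and $\text{cov}[\mathbf{X}]$ are quadratic with respect to multiplicative constants!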

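As a second example, recall that the univariate variance of $X + a$ is just the variance of $X$:

$$
\mathbb{V}[X + a] = \mathbb{V}[X].
$$

This is intuitive. A constant shift in the distribution does not change its dispersion. And again, in the general case, a constant shift by a non-random vector $\mathbf{a}$ in a multivariate distribution does not change its dispersion:

$$
\text{cov}[\mathbf{X} + \mathbf{a}] = \text{cov}[\mathbf{X}].
$$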

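Finally, a standard decomposition of variance is to write it as

$$
\mathbb{V}[X] = \mathbb{E}[X^2] - \mathbb{E}[X]^2.
$$

And this standard decomposition has a multi-dimensional analog:

$$
\text{cov}[\mathbf{X}] = \mathbb{E}[\mathbf{X}\mathbf{X}^{\top}] - \mathbb{E}[\mathbf{X}] \, \mathbb{E}[\mathbf{X}]^{\top}.
$$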

Anyway, my point is not to provide detailed or comprehensive proofs, but only to underscore that covariance matrices have properties that indicate they are simply high-dimensional (co)variances.
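
In place of proofs, here is a small numerical check of the three properties in this section (scaling by a matrix, invariance to a constant shift, and the second-moment decomposition). It is only a sketch: the data, the matrix `A`, the shift `a`, and the helper `cov` are all hypothetical. The identities hold exactly for sample covariances normalized by the number of samples, so the checks do not depend on the sample size.

```python
import numpy as np

def cov(samples):
    """Covariance matrix of samples stored one per row (normalized by N)."""
    centered = samples - samples.mean(axis=0)
    return centered.T @ centered / len(samples)

rng = np.random.default_rng(1)
x = rng.normal(size=(500, 3))       # hypothetical samples of X
A = rng.normal(size=(2, 3))         # a non-random matrix
a = np.array([1.0, -2.0, 3.0])      # a non-random shift

# Scaling: the covariance of AX is A cov[X] A^T (each row of x @ A.T is A @ x_i).
scaled = cov(x @ A.T)
print(np.allclose(scaled, A @ cov(x) @ A.T))

# Shift: adding a constant vector does not change the covariance.
shifted = cov(x + a)
print(np.allclose(shifted, cov(x)))

# Decomposition: cov[X] equals E[X X^T] minus E[X] E[X]^T.
second_moment = x.T @ x / len(x)
mean = x.mean(axis=0)
print(np.allclose(second_moment - np.outer(mean, mean), cov(x)))
```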

Non-negativity

Another neat connection is that covariance matrices are positive semi-definite (PSD), which I’ll denote with

$$
\text{cov}[\mathbf{X}] \succeq 0,
$$

while univariate variances are non-negative numbers:

$$
\mathbb{V}[X] \geq 0.
$$

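In this view, the Cholesky decomposition of a PSD matrix is simply a high-dimensional square root! So the Cholesky factor $\mathbf{L}$ can be viewed as the high-dimensional standard deviation of $\mathbf{X}$, since

$$
\text{cov}[\mathbf{X}] = \mathbf{L}\mathbf{L}^{\top}
\quad \sim \quad
\mathbb{V}[X] = \sqrt{\mathbb{V}[X]} \sqrt{\mathbb{V}[X]}.
$$

Here, I am wildly abusing notation to use $\sim$ to mean “analogous to”.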
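
The square-root analogy can be checked numerically. The sketch below uses a hypothetical two-by-two covariance matrix and assumes it is strictly positive definite, which is what `np.linalg.cholesky` requires.

```python
import numpy as np

# Hypothetical covariance matrix (strictly positive definite).
cov = np.array([[4.0, 1.2],
                [1.2, 1.0]])

# PSD check: all eigenvalues of a covariance matrix are non-negative.
eigenvalues = np.linalg.eigvalsh(cov)
print(eigenvalues)

# The lower-triangular Cholesky factor plays the role of a standard deviation.
L = np.linalg.cholesky(cov)
print(L)
print(np.allclose(L @ L.T, cov))

# In one dimension the Cholesky factor is exactly the standard deviation.
L_scalar = np.linalg.cholesky(np.array([[9.0]]))
print(L_scalar)
```

For this matrix the factor works out to rows (2, 0) and (0.6, 0.8), and the one-dimensional factor of a variance of 9 is 3.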

Precision and whitening

The precision $p$ of a scalar random variable $X$ is the reciprocal of its variance:

$$
p = \frac{1}{\mathbb{V}[X]}.
$$

Hopefully the name is somewhat intuitive. When a random variable has high precision, this means it has low variance and thus a smaller range of possible outcomes. A common place that precision arises is when whitening data, or standardizing it to have zero mean and unit variance. We can do this by subtracting the mean of $X$ and then dividing it by its standard deviation:

$$
Z = \frac{X - \mathbb{E}[X]}{\sqrt{\mathbb{V}[X]}}.
$$

This is sometimes referred to as z-scoring.

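What’s the multivariate analog to this? We can define the precision matrix $\mathbf{P}$ as the inverse of the covariance matrix or

$$
\mathbf{P} = \text{cov}[\mathbf{X}]^{-1}.
$$

Given the Cholesky decomposition $\text{cov}[\mathbf{X}] = \mathbf{L}\mathbf{L}^{\top}$ above, we can write the precision matrix in terms of the Cholesky factor $\mathbf{L}$ as:

$$
\mathbf{P} = (\mathbf{L}\mathbf{L}^{\top})^{-1} = \mathbf{L}^{-\top} \mathbf{L}^{-1}.
$$

So the multivariate analog to z-scoring is

$$
\mathbf{Z} = \mathbf{L}^{-1} (\mathbf{X} - \mathbb{E}[\mathbf{X}]).
$$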

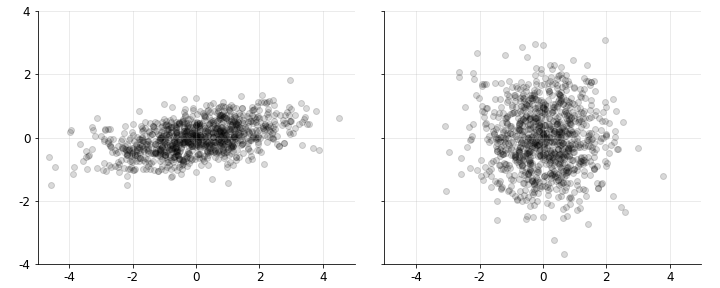

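The geometric or visual effect of this operation is to apply a linear transformation (in general, a combination of rotation and scaling rather than a pure rotation) to our data (samples of the random vector $\mathbf{X}$) with covariance matrix $\text{cov}[\mathbf{X}]$, mapping them into a new set of variables with an identity covariance matrix (Figure 2).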

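Ignoring the mean (that is, assuming $\mathbb{E}[\mathbf{X}] = \mathbf{0}$, so that $\text{cov}[\mathbf{X}] = \mathbb{E}[\mathbf{X}\mathbf{X}^{\top}] = \mathbf{L}\mathbf{L}^{\top}$ and $\mathbf{Z} = \mathbf{L}^{-1}\mathbf{X}$), we can easily verify this transformation works:

$$
\text{cov}[\mathbf{Z}]
= \mathbb{E}[\mathbf{L}^{-1} \mathbf{X} \mathbf{X}^{\top} \mathbf{L}^{-\top}]
= \mathbf{L}^{-1} \mathbb{E}[\mathbf{X}\mathbf{X}^{\top}] \mathbf{L}^{-\top}
= \mathbf{L}^{-1} \mathbf{L} \mathbf{L}^{\top} \mathbf{L}^{-\top}
= \mathbf{I}.
$$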

This derivation is a little more tedious with a mean, but hopefully the idea is clear. Why the Cholesky decomposition actually works is a deeper idea, one worth its own post, but I think the essential ideas are also captured in principal components analysis (PCA).
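
Here is a minimal sketch of Cholesky whitening on simulated data, including the mean. The mixing matrix, the offset, and the variable names are hypothetical. It solves a linear system with the Cholesky factor rather than forming its inverse explicitly, which is a common choice but not the only one.

```python
import numpy as np

rng = np.random.default_rng(2)

# Hypothetical correlated data with a non-zero mean, one sample per row.
mixing = np.array([[2.0, 0.0],
                   [1.5, 0.5]])
x = rng.normal(size=(2000, 2)) @ mixing.T + np.array([3.0, -1.0])

mean = x.mean(axis=0)
cov = np.cov(x, rowvar=False)
L = np.linalg.cholesky(cov)

# Whitening: subtract the mean, then apply the inverse Cholesky factor
# by solving L z = (x - mean) for every sample at once.
z = np.linalg.solve(L, (x - mean).T).T

print(np.round(z.mean(axis=0), 6))
print(np.round(np.cov(z, rowvar=False), 6))
```

Because the factor is computed from the sample covariance itself, the whitened samples have zero mean and identity covariance up to floating-point error.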

Summary statistics

It would be useful to summarize the information in a covariance matrix with a single number. To my knowledge, there are at least two such summary statistics that capture different types of information.

Total variance. The total variance of a random vector $\mathbf{X}$ is the trace of its covariance matrix or

$$
\text{tr}(\text{cov}[\mathbf{X}]) = \sum_{i=1}^{n} \mathbb{V}[X_i].
$$

We can see that total variance is a scalar that summarizes the variance across the components of $\mathbf{X}$. This concept is used in PCA, where total variance is preserved across the transformation. Of course, in the one-dimensional case, total variance is simply the variance $\mathbb{V}[X]$.

Generalized variance. The generalized variance (Wilks, 1932) of a random vector $\mathbf{X}$ is the determinant of its covariance matrix, $\det(\text{cov}[\mathbf{X}])$.

There are nice geometric interpretations of the determinant, but perhaps the simplest way to think about it here is that the determinant is equal to the product of the eigenvalues $\lambda_1, \dots, \lambda_n$ of $\text{cov}[\mathbf{X}]$ or

$$
\det(\text{cov}[\mathbf{X}]) = \prod_{i=1}^{n} \lambda_i.
$$

So we can think of generalized variance as capturing the magnitude of the linear transformation represented by $\text{cov}[\mathbf{X}]$.

Generalized variance is quite different from total variance. For example, consider the two-dimensional covariance matrix implied by the following values for the standard deviations and the correlation:

$$
\sigma_1 = \sigma_2 = 1, \qquad \rho = 0.
$$

Clearly, the total variance is two, while the determinant is one. Now imagine that the variables are highly correlated, that $\rho$ is close to one. Then the total variance is still two, but the determinant, which is $1 - \rho^2$ for unit variances, is now smaller, as the matrix becomes “more singular” (for $\rho = 0.99$, say, it is roughly $0.02$). So total variance, as its name suggests, really just summarizes the dispersion in $\mathbf{X}$, while generalized variance also captures how the variables in $\mathbf{X}$ covary. When the variables in $\mathbf{X}$ are highly (un-) correlated, generalized variance will be low (high).
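
The contrast between the two summary statistics can be sketched in a few lines of NumPy. The helper `covariance_2d` and the specific correlation of 0.99 are illustrative assumptions, not values taken from the text.

```python
import numpy as np

def covariance_2d(sigma1, sigma2, rho):
    """Two-by-two covariance matrix from standard deviations and a correlation."""
    return np.array([[sigma1**2, rho * sigma1 * sigma2],
                     [rho * sigma1 * sigma2, sigma2**2]])

uncorrelated = covariance_2d(1.0, 1.0, 0.0)
correlated = covariance_2d(1.0, 1.0, 0.99)   # hypothetical "highly correlated" case

for name, cov in [("uncorrelated", uncorrelated), ("correlated", correlated)]:
    total_variance = np.trace(cov)
    generalized_variance = np.linalg.det(cov)
    print(f"{name}: total = {total_variance:.4f}, generalized = {generalized_variance:.4f}")

# The determinant is the product of the eigenvalues.
eigenvalue_product = np.prod(np.linalg.eigvalsh(correlated))
```

The total variance is two in both cases, while the generalized variance drops from one to 1 − 0.99² = 0.0199.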

Examples

Let’s end with two illuminating examples that use the ideas in this post.

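Multivariate normal. First, recall that the probability density function (PDF) for a univariate normal random variable $X$ with mean $\mu$ and variance $\sigma^2$ is

$$
p(x) = \frac{1}{\sqrt{2 \pi \sigma^2}} \exp\left\{ -\frac{1}{2} \left( \frac{x - \mu}{\sigma} \right)^2 \right\}.
$$

We can immediately see that the squared term is just the square of the z-score from the whitening section. Now armed with the interpretation that a covariance matrix is high-dimensional variance, consider the PDF for an $n$-dimensional multivariate normal random variable with mean $\boldsymbol{\mu}$ and covariance matrix $\boldsymbol{\Sigma}$:

$$
p(\mathbf{x}) = \frac{1}{\sqrt{(2 \pi)^n \det(\boldsymbol{\Sigma})}} \exp\left\{ -\frac{1}{2} (\mathbf{x} - \boldsymbol{\mu})^{\top} \boldsymbol{\Sigma}^{-1} (\mathbf{x} - \boldsymbol{\mu}) \right\}.
$$

We can see that the squared Mahalanobis distance $(\mathbf{x} - \boldsymbol{\mu})^{\top} \boldsymbol{\Sigma}^{-1} (\mathbf{x} - \boldsymbol{\mu})$ is the squared length of a multivariate whitening of $\mathbf{x}$. And the variance $\sigma^2$ in the univariate normalizing term,

$$
\frac{1}{\sqrt{2 \pi \sigma^2}},
$$

has a multivariate analog that is the generalized variance $\det(\boldsymbol{\Sigma})$ above.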
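
To make the connection concrete, here is a hedged sketch of a multivariate normal log-density written in terms of whitening and generalized variance. The function name `mvn_logpdf` and the example numbers are hypothetical. It assumes a strictly positive definite covariance matrix and is meant as an illustration rather than a replacement for a library routine.

```python
import numpy as np

def mvn_logpdf(x, mean, cov):
    """Log-density of a multivariate normal via the Cholesky factor of cov."""
    n = len(mean)
    L = np.linalg.cholesky(cov)
    # Whitened residual: z = L^{-1} (x - mean).
    z = np.linalg.solve(L, x - mean)
    # Squared Mahalanobis distance is the squared length of the whitened residual.
    mahalanobis_sq = z @ z
    # log det(cov) = 2 * sum(log(diag(L))), the log generalized variance.
    log_det = 2.0 * np.sum(np.log(np.diag(L)))
    return -0.5 * (n * np.log(2.0 * np.pi) + log_det + mahalanobis_sq)

# Hypothetical example values.
mean = np.array([1.0, -1.0])
cov = np.array([[4.0, 1.2],
                [1.2, 1.0]])
x = np.array([0.5, 0.0])
value = mvn_logpdf(x, mean, cov)
print(value)

# In one dimension this reduces to the univariate normal log-density.
value_1d = mvn_logpdf(np.array([1.0]), np.array([0.0]), np.array([[4.0]]))
print(value_1d)
```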

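Correlated random variables. Consider a scalar random variable $Z$ with unit variance, $\mathbb{V}[Z] = 1$. We can transform this into a random variable $X$ with variance $\sigma^2$ by multiplying $Z$ by $\sigma$:

$$
X = \sigma Z, \qquad \mathbb{V}[X] = \sigma^2 \, \mathbb{V}[Z] = \sigma^2.
$$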

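What is the multi-dimensional version of this? If we have two uncorrelated random variables with unit variance,

$$
\mathbf{Z} = \begin{bmatrix} Z_1 \\ Z_2 \end{bmatrix}, \qquad \text{cov}[\mathbf{Z}] = \mathbf{I},
$$

how can we transform them into a random vector with covariance matrix $\boldsymbol{\Sigma}$? Clearly, we multiply $\mathbf{Z}$ by the Cholesky factor $\mathbf{L}$ of $\boldsymbol{\Sigma} = \mathbf{L}\mathbf{L}^{\top}$, since $\text{cov}[\mathbf{L}\mathbf{Z}] = \mathbf{L} \, \text{cov}[\mathbf{Z}] \, \mathbf{L}^{\top} = \mathbf{L}\mathbf{L}^{\top}$.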

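What this suggests, however, is a generic algorithm for generating correlated random variables: we multiply $\mathbf{Z}$ by the Cholesky factor of the covariance matrix when $\text{cov}[\mathbf{Z}] = \mathbf{I}$. In the two-by-two case with unit variances and correlation $\rho$, that’s

$$
\begin{bmatrix} 1 & \rho \\ \rho & 1 \end{bmatrix}
=
\begin{bmatrix} 1 & 0 \\ \rho & \sqrt{1 - \rho^2} \end{bmatrix}
\begin{bmatrix} 1 & \rho \\ 0 & \sqrt{1 - \rho^2} \end{bmatrix}.
$$

This suggests an algorithm: draw two i.i.d. random variables $Z_1$ and $Z_2$, both with unit variance. Then set

$$
X_1 = Z_1, \qquad X_2 = \rho Z_1 + \sqrt{1 - \rho^2} \, Z_2.
$$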

Of course, this is nice because it can be vectorized and extends to an arbitrary number of random variables. And we can easily account for non-unit variances if we would like.
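
A vectorized version of this recipe might look like the following sketch. The target correlation of 0.8, the sample size, and the use of standard normal draws are assumptions made for illustration. Any i.i.d. draws with zero mean and unit variance would serve.

```python
import numpy as np

rho = 0.8  # hypothetical target correlation
target = np.array([[1.0, rho],
                   [rho, 1.0]])
L = np.linalg.cholesky(target)

rng = np.random.default_rng(3)
# Rows are Z_1 and Z_2: i.i.d. draws with zero mean and unit variance.
z = rng.normal(size=(2, 100_000))

# Multiply by the Cholesky factor to induce the target covariance.
x = L @ z

# The same thing written out by hand for the two-by-two case.
x2_manual = rho * z[0] + np.sqrt(1.0 - rho**2) * z[1]

sample_corr = np.corrcoef(x)[0, 1]
print(f"sample correlation: {sample_corr:.3f}")
```

To target non-unit variances, the same code works with a general covariance matrix in place of `target`.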

Conclusion

With the proper framing, it is fairly natural to think of $\text{cov}[\mathbf{X}]$ as simply variance and to denote it as $\mathbb{V}[\mathbf{X}]$. This framing is useful because it makes certain properties of covariance matrices almost obvious, such as why they are positive semi-definite or why their inverses appear when whitening data. However, high-dimensional variance has properties that are not important in one dimension, such as the correlation between the variables in $\mathbf{X}$. Thus in my mind, the best framing is that univariate variance is really a special case of covariance matrices. Either way, the reframing is useful for gaining a deeper intuition for the material.

- Wilks, S. S. (1932). Certain generalizations in the analysis of variance. Biometrika, 471–494.