Multicollinearity

Multicollinearity is when two or more predictors are linearly dependent. This can impact the interpretability of a linear model's estimated coefficients. I discuss this phenomenon in detail.

Linearly dependent predictors

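In my first post on linear models, we derived the normal equation for linear regression or ordinary least squares (OLS). Let $\mathbf{y}$ be an $N$-vector of targets, and let $\mathbf{X}$ be an $N \times P$ design matrix of predictors. Then the normal equation for the OLS coefficients is

$$
\hat{\boldsymbol{\beta}} = (\mathbf{X}^{\top}\mathbf{X})^{-1} \mathbf{X}^{\top} \mathbf{y}.
$$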

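Note that estimating $\hat{\boldsymbol{\beta}}$ depends on the Gram matrix $\mathbf{X}^{\top}\mathbf{X}$ being invertible. Furthermore, the variance of this estimator,

$$
\mathbb{V}[\hat{\boldsymbol{\beta}} \mid \mathbf{X}] = \sigma^2 (\mathbf{X}^{\top}\mathbf{X})^{-1},
$$

where $\sigma^2$ is the noise variance,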

also depends on $\mathbf{X}^{\top}\mathbf{X}$ being invertible. Therefore, a key question for OLS is: when is $\mathbf{X}^{\top}\mathbf{X}$ invertible? The Gram matrix $\mathbf{X}^{\top}\mathbf{X}$ is invertible if and only if $\mathbf{X}$ is full rank, and $\mathbf{X}$ is full (column) rank when none of its columns can be represented as a linear combination of the other columns. See A1 for a proof of this claim. (Notice that this immediately implies that $N \geq P$, since the $P$ columns of $\mathbf{X}$ cannot be linearly independent if their size $N$ is less than $P$. See my discussion of the linear–dimension inequality in my post on linear dependence if needed. Thus, OLS requires at least as many observations as predictors.)
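A minimal NumPy sketch of the normal equation, assuming a small synthetic design matrix (the data and variable names are illustrative, not from the original analysis). It checks that $\mathbf{X}$ has full column rank before solving, and compares the result against `np.linalg.lstsq`, which solves the least-squares problem without forming $(\mathbf{X}^{\top}\mathbf{X})^{-1}$ explicitly.

```python
import numpy as np

rng = np.random.default_rng(0)
N, P = 50, 3

# Synthetic design matrix and targets (illustrative only).
X = rng.normal(size=(N, P))
beta_true = np.array([1.0, -2.0, 0.5])
y = X @ beta_true + 0.1 * rng.normal(size=N)

# X^T X is invertible iff X has full column rank (see A1).
assert np.linalg.matrix_rank(X) == P

# Normal equation: solve (X^T X) beta = X^T y rather than inverting explicitly.
gram = X.T @ X
beta_normal = np.linalg.solve(gram, X.T @ y)

# Reference solution from NumPy's least-squares solver.
beta_lstsq, *_ = np.linalg.lstsq(X, y, rcond=None)

print(beta_normal)
print(np.allclose(beta_normal, beta_lstsq))  # True
```

Solving the linear system is generally preferred over computing the inverse, but both routes still depend on $\mathbf{X}^{\top}\mathbf{X}$ being nonsingular.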

If the predictors in $\mathbf{X}$ are linearly dependent, then we say they are multicollinear, and we call this phenomenon exact multicollinearity. In practice, our predictors rarely exhibit exact multicollinearity, as this would mean that at least one predictor is completely redundant given the others. However, multicollinearity can still be a problem without a perfect linear relationship between predictors. First, models can be difficult to interpret since multicollinear predictors can be predictive of the same response. Second, inverting $\mathbf{X}^{\top}\mathbf{X}$ may be impossible at worst and numerically unstable at best. If the inversion is unstable, the estimated $\hat{\boldsymbol{\beta}}$ can change dramatically given a small change in the input.

Let’s discuss both problems in turn.

Uninterpretable features

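The first problem with multicollinearity is that it makes it difficult to disentangle the effects of two or more multicollinear predictors. Imagine, for example, that we use a linear model to predict housing prices and that two predictors, $\mathbf{x}_1$ and $\mathbf{x}_2$, both measure the size of the house. One is the area in square feet, and the other is the area in square meters. Since $\mathbf{x}_1$ and $\mathbf{x}_2$ are collinear, $\mathbf{x}_2 = c\,\mathbf{x}_1$ for some constant $c$, and therefore:

$$
\beta_1 \mathbf{x}_1 + \beta_2 \mathbf{x}_2 = \beta_1 \mathbf{x}_1 + \beta_2 c\,\mathbf{x}_1 = (\beta_1 + c \beta_2)\,\mathbf{x}_1.
$$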

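Here, there is no unique solution to the normal equation (recall that there are $P$ such equations but $\text{rank}(\mathbf{X}^{\top}\mathbf{X}) < P$). If the data determine the value $\gamma = \beta_1 + c \beta_2$, then for any choice of $\beta_1$ we can satisfy this constraint by setting $\beta_2 = (\gamma - \beta_1)/c$, so there are infinitely many equally good pairs $(\beta_1, \beta_2)$. Given this fact, it is difficult to properly interpret $\beta_1$ and $\beta_2$ since, in principle, they can take any value. This also has downstream effects. If the variance of $\hat{\beta}_j$ is high, then it will be harder to reject the null hypothesis that $\beta_j = 0$.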
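To make the non-uniqueness concrete, here is a small sketch with two exactly collinear predictors, assuming $\mathbf{x}_2 = c\,\mathbf{x}_1$ with a hypothetical $c = 0.0929$ (roughly the square-feet-to-square-meters factor) and synthetic prices. Two very different coefficient vectors that share the same value of $\beta_1 + c\beta_2$ produce identical predictions, so the data cannot tell them apart.

```python
import numpy as np

rng = np.random.default_rng(1)
N = 30
c = 0.0929  # hypothetical scale factor, roughly ft^2 -> m^2

x1 = rng.uniform(1000, 3000, size=N)            # area in square feet (synthetic)
x2 = c * x1                                     # same area in square meters
X = np.column_stack([x1, x2])
y = 150.0 * x1 + rng.normal(scale=1e4, size=N)  # synthetic prices

# X (and hence X^T X) has rank 1, so the normal equation has no unique solution.
print(np.linalg.matrix_rank(X))  # 1

# lstsq returns the minimum-norm solution among infinitely many.
beta_a, *_ = np.linalg.lstsq(X, y, rcond=None)
gamma = beta_a[0] + c * beta_a[1]

# Pick an arbitrary beta_1 and solve gamma = beta_1 + c * beta_2 for beta_2.
beta_1 = 1000.0
beta_b = np.array([beta_1, (gamma - beta_1) / c])

# Both coefficient vectors give the same fitted values.
print(beta_a, beta_b)
print(np.allclose(X @ beta_a, X @ beta_b))  # True
```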

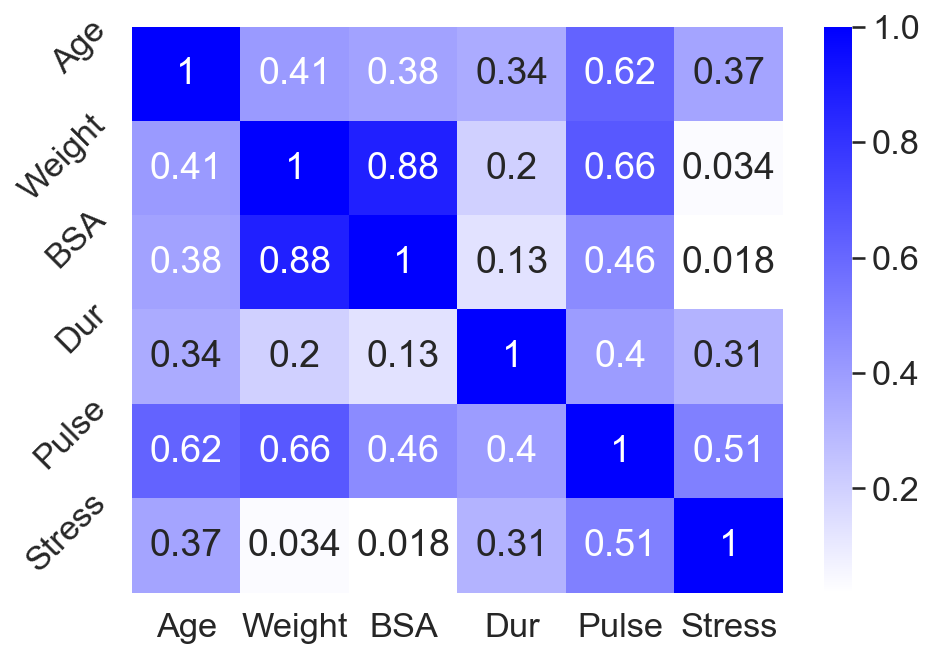

As an example of this behavior, consider predicting blood pressure using the data in A2. The predictors are age (years), weight (kilograms), body-surface area (square meters), duration of hypertension (years), basal pulse (beats per minute), and stress index. Since body-surface area (BSA) and weight are highly correlated (Figure 1), we hypothesize that these data exhibit multicollinearity. (I’ll discuss a quantitative test for multicollinearity later.)

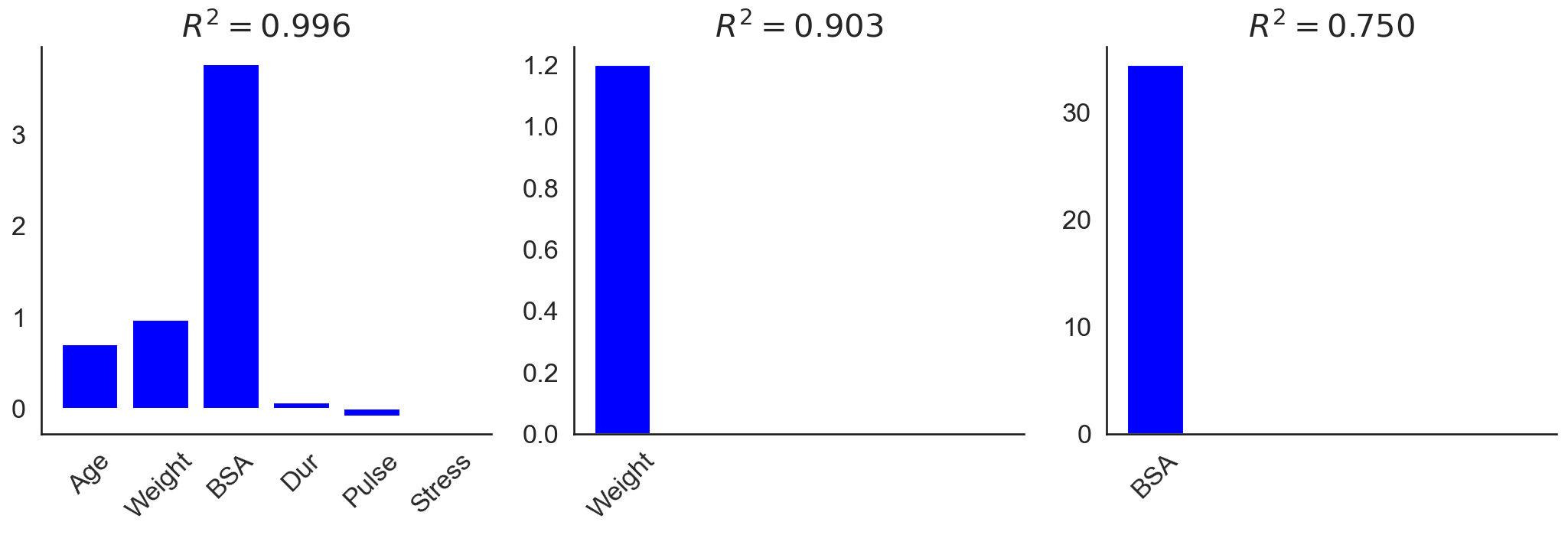

To see the effects of multicollinearity, I fit OLS to three different subsets of predictors: all predictors, only weight, and only BSA. As we can see in Figure 2, the OLS coefficients can be tricky to interpret. Based on the coefficients from fitting OLS to all predictors (Figure 2, left), we might expect BSA to be the most important feature for predicting blood pressure. However, looking at the coefficients for OLS fit to just weight (Figure 2, middle) and just BSA (Figure 2, right) complicates the picture. We find that weight is actually more predictive of blood pressure, as measured by the coefficient of determination $R^2$. This is because BSA, weight, and blood pressure are all correlated, but weight is more highly correlated with blood pressure than BSA is. However, when both predictors are included in the model, multicollinearity complicates the analysis.

Also notice that the BSA-only model’s coefficient is quite large. My understanding is that extreme values, as well as sign changes, suggest that a data set may suffer from multicollinearity.
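The three fits can be sketched with plain NumPy least squares on the data from A2. This is an assumption-laden reconstruction rather than the original code: every model includes an intercept, $R^2$ is computed in-sample, and the helper `fit_ols` is a hypothetical name. The exact coefficients may not match the figure.

```python
import numpy as np

# Blood pressure data from A2 (patient IDs dropped).
# Columns: BP, Age, Weight, BSA, Dur, Pulse, Stress
data = np.array([
    [105, 47,  85.4, 1.75,  5.1, 63, 33],
    [115, 49,  94.2, 2.10,  3.8, 70, 14],
    [116, 49,  95.3, 1.98,  8.2, 72, 10],
    [117, 50,  94.7, 2.01,  5.8, 73, 99],
    [112, 51,  89.4, 1.89,  7.0, 72, 95],
    [121, 48,  99.5, 2.25,  9.3, 71, 10],
    [121, 49,  99.8, 2.25,  2.5, 69, 42],
    [110, 47,  90.9, 1.90,  6.2, 66,  8],
    [110, 49,  89.2, 1.83,  7.1, 69, 62],
    [114, 48,  92.7, 2.07,  5.6, 64, 35],
    [114, 47,  94.4, 2.07,  5.3, 74, 90],
    [115, 49,  94.1, 1.98,  5.6, 71, 21],
    [114, 50,  91.6, 2.05, 10.2, 68, 47],
    [106, 45,  87.1, 1.92,  5.6, 67, 80],
    [125, 52, 101.3, 2.19, 10.0, 76, 98],
    [114, 46,  94.5, 1.98,  7.4, 69, 95],
    [106, 46,  87.0, 1.87,  3.6, 62, 18],
    [113, 46,  94.5, 1.90,  4.3, 70, 12],
    [110, 48,  90.5, 1.88,  9.0, 71, 99],
    [122, 56,  95.7, 2.09,  7.0, 75, 99],
])
names = ["Age", "Weight", "BSA", "Dur", "Pulse", "Stress"]
y, X_all = data[:, 0], data[:, 1:]

def fit_ols(X, y):
    """Fit OLS with an intercept; return coefficients and in-sample R^2."""
    A = np.column_stack([np.ones(len(y)), X])
    coef, *_ = np.linalg.lstsq(A, y, rcond=None)
    resid = y - A @ coef
    r2 = 1 - resid @ resid / np.sum((y - y.mean()) ** 2)
    return coef, r2

weight = X_all[:, [names.index("Weight")]]
bsa = X_all[:, [names.index("BSA")]]

coef_all, r2_all = fit_ols(X_all, y)
coef_w, r2_w = fit_ols(weight, y)
coef_b, r2_b = fit_ols(bsa, y)

print("corr(Weight, BSA):", np.corrcoef(weight[:, 0], bsa[:, 0])[0, 1])
for label, coef, r2 in [("all", coef_all, r2_all),
                        ("weight", coef_w, r2_w),
                        ("BSA", coef_b, r2_b)]:
    print(f"{label:>6}: R^2 = {r2:.3f}, slopes = {np.round(coef[1:], 3)}")
```

Note that the size of a raw coefficient also depends on the predictor’s units: BSA takes values near 2, so its slope is naturally larger than weight’s.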

Unstable parameter estimates

The second problem with multicollinearity is that our parameter estimates will be algorithmically unstable. To see this, let’s quantify multicollinearity using the condition number of a matrix. In general, a well-conditioned problem is one in which a small change in the input $\mathbf{x}$ results in a small change in the output $f(\mathbf{x})$. An ill-conditioned problem is one in which a small change in the input $\mathbf{x}$ results in a large change in the output $f(\mathbf{x})$.

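The condition number of a matrix $\mathbf{A}$, denoted $\kappa(\mathbf{A})$, is the ratio of its largest to smallest singular values,

$$
\kappa(\mathbf{A}) = \frac{\sigma_{\max}(\mathbf{A})}{\sigma_{\min}(\mathbf{A})},
$$

where $\sigma_{\max}(\mathbf{A})$ and $\sigma_{\min}(\mathbf{A})$ are the largest and smallest singular values of $\mathbf{A}$. As we can see, when the smallest singular value is close to zero, the condition number of $\mathbf{A}$ is large.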
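As a sketch, the condition number can be computed directly from the singular values and compared with `np.linalg.cond`, which by default uses the 2-norm (the ratio of largest to smallest singular value). The nearly singular matrix below is a hypothetical example: perturbing the right-hand side $\mathbf{b}$ of $\mathbf{A}\mathbf{x} = \mathbf{b}$ by $10^{-6}$ moves the solution by roughly $10^2$.

```python
import numpy as np

def condition_number(A):
    """Ratio of the largest to smallest singular value of A."""
    s = np.linalg.svd(A, compute_uv=False)  # sorted in descending order
    return s[0] / s[-1]

# A hypothetical, nearly singular 2x2 matrix.
A = np.array([[1.0, 1.0],
              [1.0, 1.0 + 1e-8]])
print(condition_number(A), np.linalg.cond(A))

# Ill-conditioning: a tiny change in b causes a large change in x.
b = np.array([2.0, 2.0])
b_perturbed = b + np.array([0.0, 1e-6])
x = np.linalg.solve(A, b)
x_perturbed = np.linalg.solve(A, b_perturbed)
print(np.linalg.norm(b_perturbed - b), np.linalg.norm(x_perturbed - x))
```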

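But what’s the intuition behind this definition? Consider the singular value decomposition (SVD) of a square $P \times P$ matrix $\mathbf{A}$:

$$
\mathbf{A} = \mathbf{U} \boldsymbol{\Sigma} \mathbf{V}^{\top},
$$

where $\mathbf{U}$ and $\mathbf{V}$ are orthogonal matrices, and $\boldsymbol{\Sigma}$ is a diagonal matrix of singular values:

$$
\boldsymbol{\Sigma} = \begin{bmatrix} \sigma_1 & & \\ & \ddots & \\ & & \sigma_P \end{bmatrix}.
$$

Then the inverse of $\mathbf{A}$ can be written as

$$
\mathbf{A}^{-1} = \mathbf{V} \boldsymbol{\Sigma}^{-1} \mathbf{U}^{\top},
$$

where, since $\boldsymbol{\Sigma}$ is a diagonal matrix, its inverse is just the diagonal matrix of the reciprocals of its diagonal elements:

$$
\boldsymbol{\Sigma}^{-1} = \begin{bmatrix} 1/\sigma_1 & & \\ & \ddots & \\ & & 1/\sigma_P \end{bmatrix}.
$$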

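As we can see, when any singular value of $\mathbf{A}$ is zero, the matrix inverse does not exist. And when any singular value is small, matrix inversion can be numerically unstable. This is because $1/\sigma_p$ is highly sensitive to the value of $\sigma_p$ when $\sigma_p$ is near zero, and because we represent continuous values with finite-precision numbers on discrete machines, small rounding errors in $\sigma_p$ become large errors in $1/\sigma_p$. For example, a very small $\sigma_p$ might underflow to zero, or its reciprocal might overflow.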
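A sketch of the SVD-based inverse $\mathbf{A}^{-1} = \mathbf{V}\boldsymbol{\Sigma}^{-1}\mathbf{U}^{\top}$ in NumPy. Note that `np.linalg.svd` returns $\mathbf{V}^{\top}$ (here `Vt`) rather than $\mathbf{V}$. The matrices are hypothetical examples; the loop shows that the largest entry of $\boldsymbol{\Sigma}^{-1}$, namely $1/\sigma_{\min}$, blows up as the matrix approaches singularity.

```python
import numpy as np

def svd_inverse(A):
    """Invert a square matrix via its SVD: A^{-1} = V diag(1/s) U^T."""
    U, s, Vt = np.linalg.svd(A)
    if np.any(s == 0):
        raise np.linalg.LinAlgError("A is singular: a singular value is exactly zero.")
    return Vt.T @ np.diag(1.0 / s) @ U.T

A = np.array([[2.0, 1.0],
              [1.0, 3.0]])
A_inv = svd_inverse(A)
print(np.allclose(A_inv, np.linalg.inv(A)))  # True

# As the smallest singular value shrinks, its reciprocal dominates the inverse.
for eps in [1e-2, 1e-6, 1e-10]:
    B = np.array([[1.0, 1.0],
                  [1.0, 1.0 + eps]])
    s = np.linalg.svd(B, compute_uv=False)
    print(f"eps={eps:.0e}  sigma_min={s[-1]:.2e}  1/sigma_min={1 / s[-1]:.2e}")
```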

Thus, the size of the singular values matters both for the mathematical existence of the matrix inverse $\mathbf{A}^{-1}$ and for the algorithmic stability of computing that inverse even when it does technically exist, i.e. when no singular value is exactly zero. See (Trefethen & Bau III, 1997) for a thorough discussion of condition numbers and algorithmic stability.

This is why Python libraries such as statsmodels will warn us about small eigenvalues of $\mathbf{X}^{\top}\mathbf{X}$ (the squares of the singular values of $\mathbf{X}$) if we fit OLS to data with multicollinearity, e.g.:

```python
import numpy as np
import statsmodels.api as sm

N = 100
X = np.random.normal(size=(N, 1))
X = np.hstack([X, 2*X])  # second column is exactly twice the first
y = np.random.normal(size=N)

ols = sm.OLS(y, sm.add_constant(X))
res = ols.fit()
print(res.summary())
# Excerpt from the "Notes" section of the summary:
# The smallest eigenvalue is 1.88e-30. This might indicate that there are
# strong multicollinearity problems or that the design matrix is singular.
```
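Because the eigenvalues of $\mathbf{X}^{\top}\mathbf{X}$ are the squares of the singular values of $\mathbf{X}$, the condition numbers satisfy $\kappa(\mathbf{X}^{\top}\mathbf{X}) = \kappa(\mathbf{X})^2$: forming the Gram matrix squares whatever ill-conditioning $\mathbf{X}$ already has. A quick numerical check, assuming a synthetic design with two nearly collinear columns:

```python
import numpy as np

rng = np.random.default_rng(2)
N = 200
x1 = rng.normal(size=N)
x2 = x1 + 1e-3 * rng.normal(size=N)  # nearly collinear with x1
X = np.column_stack([x1, x2])

kappa_X = np.linalg.cond(X)
kappa_gram = np.linalg.cond(X.T @ X)
print(f"cond(X) = {kappa_X:.3e}, cond(X)^2 = {kappa_X**2:.3e}, "
      f"cond(X^T X) = {kappa_gram:.3e}")
```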

It’s not necessarily correlation

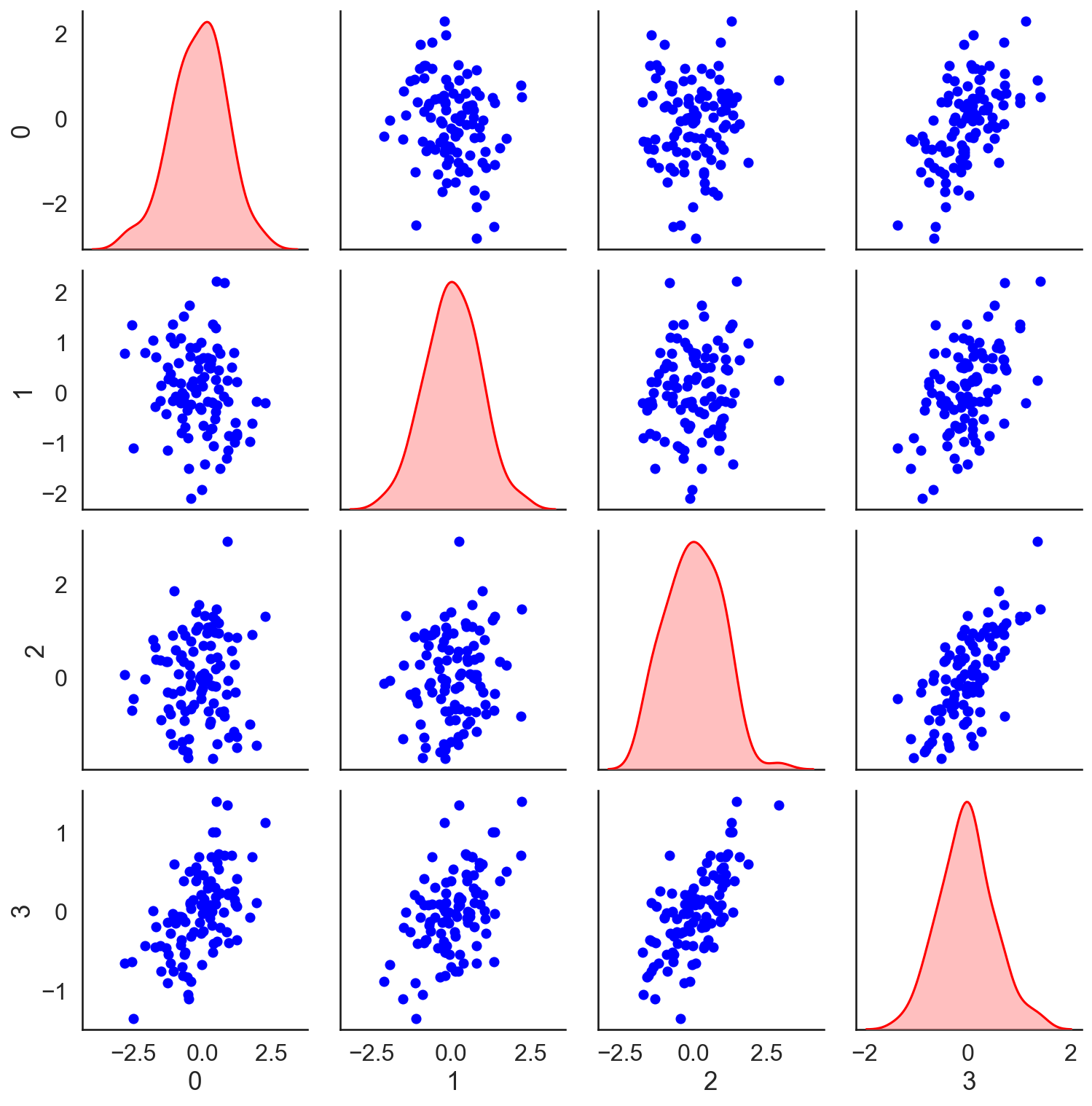

As a caveat, note that multicollinearity is about linear dependence between the columns of $\mathbf{X}$, which is not the same thing as correlation between the predictors, although the two often go together. In fact, a set of columns of $\mathbf{X}$ can be multicollinear without any pair of predictors being highly correlated. Consider, for example, three i.i.d. Gaussian random vectors $\mathbf{x}_1$, $\mathbf{x}_2$, $\mathbf{x}_3$ and a fourth vector $\mathbf{x}_4 = (\mathbf{x}_1 + \mathbf{x}_2 + \mathbf{x}_3)/3$ that is their average. The vectors are not highly correlated (Figure 3), but the design matrix has a large condition number. Running this experiment once on my machine, the condition number of the correlation matrix of the first three predictors (the top left block of Figure 3) is small, whereas the condition number for all four predictors is many orders of magnitude larger.
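A sketch of this experiment, assuming $N = 1000$ samples and a fixed random seed (the original sample size and seed are not stated, so the exact numbers will differ from the original run). Each $\mathbf{x}_i$ has population correlation $1/\sqrt{3} \approx 0.58$ with the average $\mathbf{x}_4$, and the $\mathbf{x}_i$ are mutually uncorrelated, yet the four columns are exactly linearly dependent.

```python
import numpy as np

rng = np.random.default_rng(3)
N = 1000

# Three i.i.d. Gaussian predictors and a fourth that is their average.
X3 = rng.normal(size=(N, 3))
x4 = X3.mean(axis=1, keepdims=True)
X = np.hstack([X3, x4])

corr = np.corrcoef(X, rowvar=False)
off_diag = np.abs(corr[~np.eye(4, dtype=bool)])
print("largest |correlation|:", off_diag.max())

print("cond(corr, first three):", np.linalg.cond(corr[:3, :3]))
print("cond(corr, all four):   ", np.linalg.cond(corr))
```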

Treatment

Now that we understand multicollinearity and how to detect it, how can we treat the issue? An obvious thing to try would be to just drop a predictor if it is highly correlated with another one. However, this may not be the best approach. As we saw above, it is possible for a set of predictors to exhibit multicollinearity without any two predictors being too highly correlated. Furthermore, all things being equal, we’d probably prefer to use data rather than discard it.

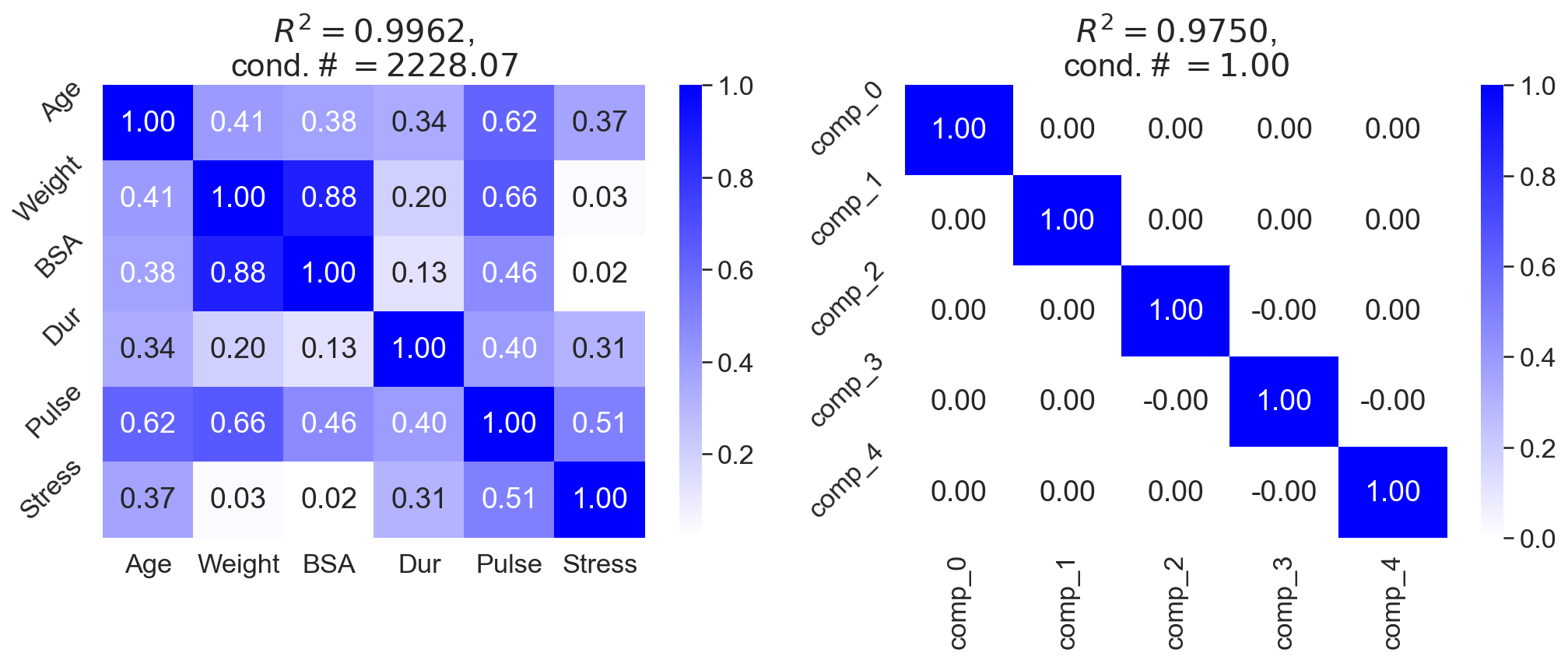

A more useful treatment is dimension reduction. Methods such as partial least squares regression and principal component regression both perform linear dimension reduction on the predictors. For example, I fit OLS to both the blood pressure data (Figure 4, left) and the latent factors obtained by fitting principal component analysis (PCA) to the blood pressure data (Figure 4, right). Both approaches have a similar coefficient of determination, but the second approach does not exhibit multicollinearity, since the principal components are uncorrelated.
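A minimal principal component regression sketch in NumPy, assuming standardized predictors, synthetic data with two nearly collinear columns, and a hypothetical helper `pcr_fit`; it is not the author’s original code. OLS is fit on the top-`k` PCA scores, which are mutually uncorrelated, so the near-degenerate direction (here, roughly the difference between the two collinear columns) is simply dropped.

```python
import numpy as np

def pcr_fit(X, y, k):
    """Principal component regression: OLS on the top-k PCA scores of standardized X."""
    Xs = (X - X.mean(axis=0)) / X.std(axis=0)
    # Rows of Vt are the principal directions, ordered by explained variance.
    _, _, Vt = np.linalg.svd(Xs, full_matrices=False)
    Z = Xs @ Vt[:k].T                         # PCA scores (latent factors)
    A = np.column_stack([np.ones(len(y)), Z])
    coef, *_ = np.linalg.lstsq(A, y, rcond=None)
    resid = y - A @ coef
    r2 = 1 - resid @ resid / np.sum((y - y.mean()) ** 2)
    return Z, coef, r2

rng = np.random.default_rng(4)
N = 100
w = rng.normal(size=N)
X = np.column_stack([w, w + 0.05 * rng.normal(size=N), rng.normal(size=N)])
y = 2.0 * X[:, 0] + X[:, 2] + 0.5 * rng.normal(size=N)

Z, coef, r2 = pcr_fit(X, y, k=2)
print("R^2 with 2 components:", round(r2, 3))
print("correlation between scores:", np.corrcoef(Z, rowvar=False)[0, 1])
```

Choosing `k` is a modeling decision; keeping all components recovers the ordinary OLS fit.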

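Another approach to mitigating the effects of multicollinearity is ridge regression. Recall that ridge regression introduces Tikhonov regularization, or an $\ell_2$-norm penalty, on the weights $\boldsymbol{\beta}$. Ridge regression is so named because the new optimization amounts to adding values along the diagonal (or “ridge”) of the Gram matrix $\mathbf{X}^{\top}\mathbf{X}$,

$$
\hat{\boldsymbol{\beta}}_{\text{ridge}} = (\mathbf{X}^{\top}\mathbf{X} + \alpha \mathbf{I})^{-1} \mathbf{X}^{\top} \mathbf{y},
$$

where $\alpha > 0$ is a hyperparameter. Since $\mathbf{X}^{\top}\mathbf{X}$ is positive semidefinite, adding $\alpha$ to its diagonal makes every eigenvalue at least $\alpha$, which ensures that the matrix is full rank, and thus invertible. This is also useful in low-data scenarios, when $N < P$.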
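A sketch of the closed-form ridge estimator $(\mathbf{X}^{\top}\mathbf{X} + \alpha\mathbf{I})^{-1}\mathbf{X}^{\top}\mathbf{y}$, assuming exactly collinear synthetic predictors and a hypothetical penalty `alpha = 1.0`. The Gram matrix alone is singular, but after adding $\alpha\mathbf{I}$ its smallest eigenvalue is about $\alpha$, so the system has a unique solution.

```python
import numpy as np

rng = np.random.default_rng(5)
N = 50
x1 = rng.normal(size=N)
X = np.column_stack([x1, 2 * x1])   # exact multicollinearity
y = 3 * x1 + 0.1 * rng.normal(size=N)

print("rank of X:", np.linalg.matrix_rank(X))  # 1, so X^T X is singular

alpha = 1.0  # hypothetical regularization strength
gram = X.T @ X
ridge_gram = gram + alpha * np.eye(X.shape[1])
print("smallest eigenvalue:", np.linalg.eigvalsh(ridge_gram).min())  # approximately alpha

beta_ridge = np.linalg.solve(ridge_gram, X.T @ y)
print("ridge coefficients:", beta_ridge)
```

With exactly collinear columns, the ridge solution splits the weight between them in proportion to the column scales (here, $\beta_2 = 2\beta_1$) rather than picking an arbitrary split.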

Conclusion

Multicollinearity is an important challenge when fitting and interpreting linear models. The phenomenon is not necessarily about correlation between predictors, and it is not necessarily an issue for the goodness-of-fit or the quality of the predictions. Rather, multicollinearity is about linear dependence between predictors in the design matrix $\mathbf{X}$, which results in numerical instability when estimating $\hat{\boldsymbol{\beta}}$ by inverting the Gram matrix $\mathbf{X}^{\top}\mathbf{X}$. We can detect multicollinearity by analyzing the singular values of $\mathbf{X}$, and we can mitigate its effects through techniques such as dimension reduction and regularization.

Appendix

A1. Proof that $\mathbf{X}^{\top}\mathbf{X}$ is invertible iff $\mathbf{X}$ is full rank

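Any Gram matrix $\mathbf{X}^{\top}\mathbf{X}$ is singular (not invertible) if and only if the columns of $\mathbf{X}$ are linearly dependent. This is straightforward to prove. First, assume that the columns of $\mathbf{X}$ are linearly dependent. By definition of linear dependence, there exists some vector $\mathbf{v} \neq \mathbf{0}$ such that $\mathbf{X}\mathbf{v} = \mathbf{0}$. It follows immediately that

$$
\mathbf{X}^{\top}\mathbf{X}\mathbf{v} = \mathbf{X}^{\top}\mathbf{0} = \mathbf{0}.
$$

Since $\mathbf{v} \neq \mathbf{0}$ by assumption, $\mathbf{X}^{\top}\mathbf{X}$ is singular. The last step holds because one definition of an invertible matrix $\mathbf{A}$ is that the equation $\mathbf{A}\mathbf{v} = \mathbf{0}$ has only the trivial solution $\mathbf{v} = \mathbf{0}$. Now assume that $\mathbf{X}^{\top}\mathbf{X}$ is singular. Then there exists a vector $\mathbf{v} \neq \mathbf{0}$ such that $\mathbf{X}^{\top}\mathbf{X}\mathbf{v} = \mathbf{0}$, and

$$
0 = \mathbf{v}^{\top}\mathbf{X}^{\top}\mathbf{X}\mathbf{v} = (\mathbf{X}\mathbf{v})^{\top}(\mathbf{X}\mathbf{v}) = \lVert \mathbf{X}\mathbf{v} \rVert_2^2.
$$

Therefore $\mathbf{X}\mathbf{v} = \mathbf{0}$ while $\mathbf{v} \neq \mathbf{0}$, so the columns of $\mathbf{X}$ are linearly dependent.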
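Both directions of the proof can be checked numerically on a hypothetical design matrix whose third column is the difference of the first two, so that $\mathbf{v} = (1, -1, -1)^{\top}$ satisfies $\mathbf{X}\mathbf{v} = \mathbf{0}$:

```python
import numpy as np

rng = np.random.default_rng(6)
X12 = rng.normal(size=(20, 2))
X = np.column_stack([X12, X12[:, 0] - X12[:, 1]])  # x3 = x1 - x2
v = np.array([1.0, -1.0, -1.0])                     # nonzero null vector

gram = X.T @ X
print(np.allclose(X @ v, 0), np.allclose(gram @ v, 0))  # True True
print("v^T X^T X v =", v @ gram @ v, "  ||Xv||^2 =", np.linalg.norm(X @ v) ** 2)
print("rank of X:", np.linalg.matrix_rank(X))           # 2, so X^T X is singular
```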

A2. Blood pressure data

These data are taken from here:

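| Patient | BP | Age (years) | Weight (kg) | BSA (m²) | Dur (years) | Pulse (beats/min) | Stress |
|---|---|---|---|---|---|---|---|
| 1 | 105 | 47 | 85.4 | 1.75 | 5.1 | 63 | 33 |
| 2 | 115 | 49 | 94.2 | 2.10 | 3.8 | 70 | 14 |
| 3 | 116 | 49 | 95.3 | 1.98 | 8.2 | 72 | 10 |
| 4 | 117 | 50 | 94.7 | 2.01 | 5.8 | 73 | 99 |
| 5 | 112 | 51 | 89.4 | 1.89 | 7.0 | 72 | 95 |
| 6 | 121 | 48 | 99.5 | 2.25 | 9.3 | 71 | 10 |
| 7 | 121 | 49 | 99.8 | 2.25 | 2.5 | 69 | 42 |
| 8 | 110 | 47 | 90.9 | 1.90 | 6.2 | 66 | 8 |
| 9 | 110 | 49 | 89.2 | 1.83 | 7.1 | 69 | 62 |
| 10 | 114 | 48 | 92.7 | 2.07 | 5.6 | 64 | 35 |
| 11 | 114 | 47 | 94.4 | 2.07 | 5.3 | 74 | 90 |
| 12 | 115 | 49 | 94.1 | 1.98 | 5.6 | 71 | 21 |
| 13 | 114 | 50 | 91.6 | 2.05 | 10.2 | 68 | 47 |
| 14 | 106 | 45 | 87.1 | 1.92 | 5.6 | 67 | 80 |
| 15 | 125 | 52 | 101.3 | 2.19 | 10.0 | 76 | 98 |
| 16 | 114 | 46 | 94.5 | 1.98 | 7.4 | 69 | 95 |
| 17 | 106 | 46 | 87.0 | 1.87 | 3.6 | 62 | 18 |
| 18 | 113 | 46 | 94.5 | 1.90 | 4.3 | 70 | 12 |
| 19 | 110 | 48 | 90.5 | 1.88 | 9.0 | 71 | 99 |
| 20 | 122 | 56 | 95.7 | 2.09 | 7.0 | 75 | 99 |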

- Trefethen, L. N., & Bau III, D. (1997). Numerical linear algebra (Vol. 50). SIAM.