A Python Implementation of the Multivariate Skew Normal

I needed a Python implementation of the multivariate skew normal. I wrote one based on SciPy's multivariate distributions module.

Probability density function

Curiously enough, SciPy does not have an implementation of the multivariate skew normal distribution. This is surprising since the probability density function (PDF) is a simple function of a multivariate PDF and a univariate cumulative distribution function (CDF):

where is the -variate normal density with zero mean and correlation matrix and is the CDF of the univariate spherical Gaussian, . This is easy to implement in Python using NumPy and SciPy:

import numpy as np

from scipy.stats import (multivariate_normal as mvn,

norm)

from scipy.stats._multivariate import _squeeze_output

class multivariate_skewnorm:

def __init__(self, shape, cov=None):

self.dim = len(shape)

self.shape = np.asarray(shape)

self.mean = np.zeros(self.dim)

self.cov = np.eye(self.dim) if cov is None else np.asarray(cov)

def pdf(self, x):

return np.exp(self.logpdf(x))

def logpdf(self, x):

x = mvn._process_quantiles(x, self.dim)

pdf = mvn(self.mean, self.cov).logpdf(x)

cdf = norm(0, 1).logcdf(np.dot(x, self.shape))

return _squeeze_output(np.log(2) + pdf + cdf)

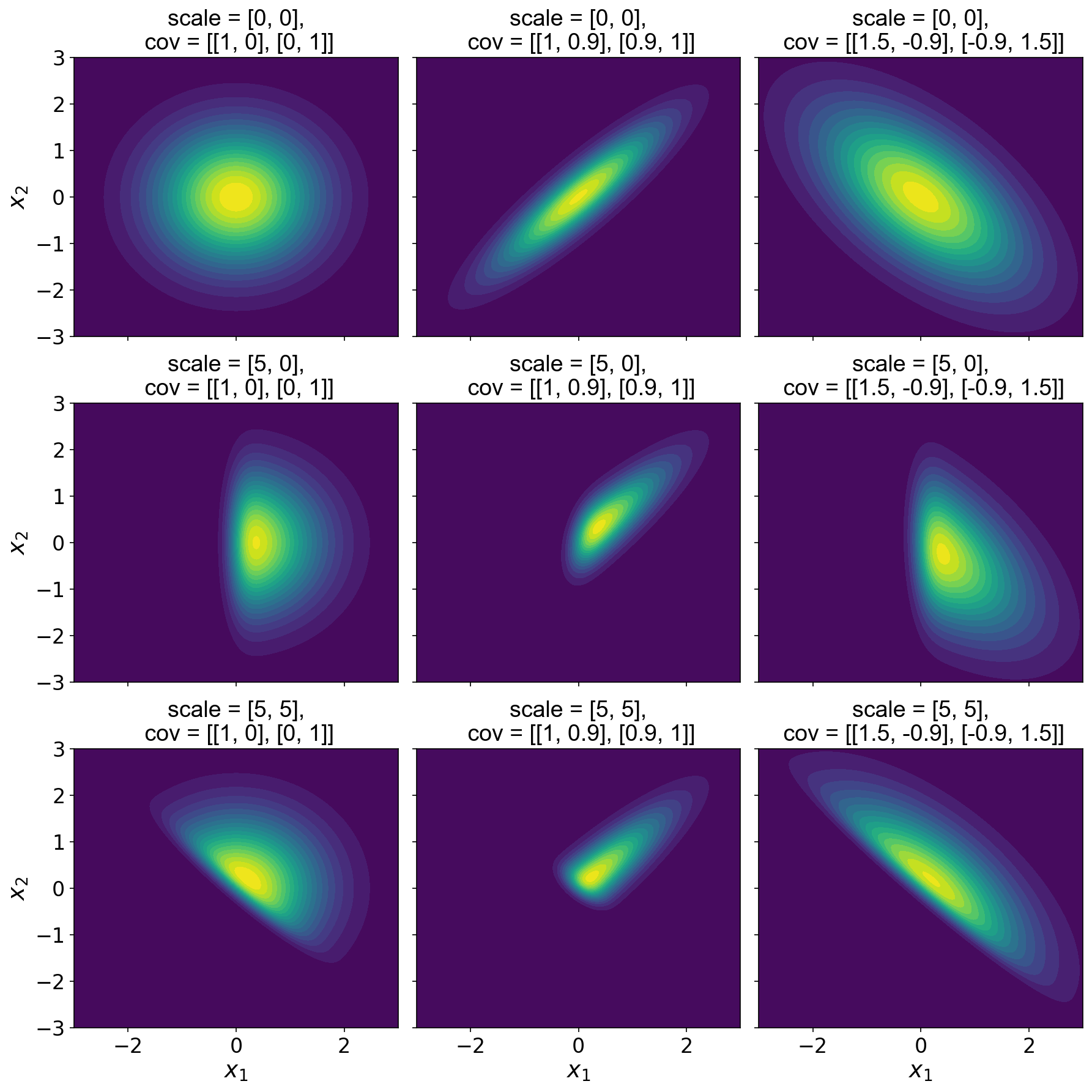

In logpdf, we use SciPy’s _process_quantiles to verify that the last dimension of x is the data dimension. We must also handle a new parameter, the correlation matrix between the variables. To illustrate this code, I’ve plotted a number of multivariate skew normal distributions over varying shape and correlation parameters (Figure ).

As we can see, when is a vector of zeros, the CDF evaluates to , and Eq. reduces to a -variate normal with zero mean and correlation matrix . So the first rows in Figure are just multivariate normal distributions. When the first component of is positive, the first component of is skewed (second row) while maintaining the correlation structure of the “underlying” Gaussian. Finally, when both values of are large, we see that both dimensions are skewed (third row). Of course, the components of can also be negative to induce negative skew.

Sampling

To sample from skew normal distribution, we could use rejection sampling. I found this idea from this StackOverflow. This is because

since is a CDF and therefore in the range . In other words, we simply sample from the a spherical Gaussian and then reject if that sample is larger than . We can extend the previous class with the following method:

def rvs_slow(self, size=1):

std_mvn = mvn(np.zeros(self.dim),

np.eye(self.dim))

x = np.empty((size, self.dim))

# Apply rejection sampling.

n_samples = 0

while n_samples < size:

z = std_mvn.rvs(size=1)

u = np.random.uniform(0, 2*std_mvn.pdf(z))

if not u > self.pdf(z):

x[n_samples] = z

n_samples += 1

# Rescale based on correlation matrix.

chol = np.linalg.cholesky(self.cov)

x = (chol @ x.T).T

return x

However, this approach is slow, and there is a faster way to sample. In (Azzalini & Capitanio, 1999), the authors propose the following. First, let

Then if define as

Then is skew normal with shape and correlation matrix . Since we never reject a sample, this can be easily vectorized:

def rvs_fast(self, size=1):

aCa = self.shape @ self.cov @ self.shape

delta = (1 / np.sqrt(1 + aCa)) * self.cov @ self.shape

cov_star = np.block([[np.ones(1), delta],

[delta[:, None], self.cov]])

x = mvn(np.zeros(self.dim+1), cov_star).rvs(size)

x0, x1 = x[:, 0], x[:, 1:]

inds = x0 <= 0

x1[inds] = -1 * x1[inds]

return x1

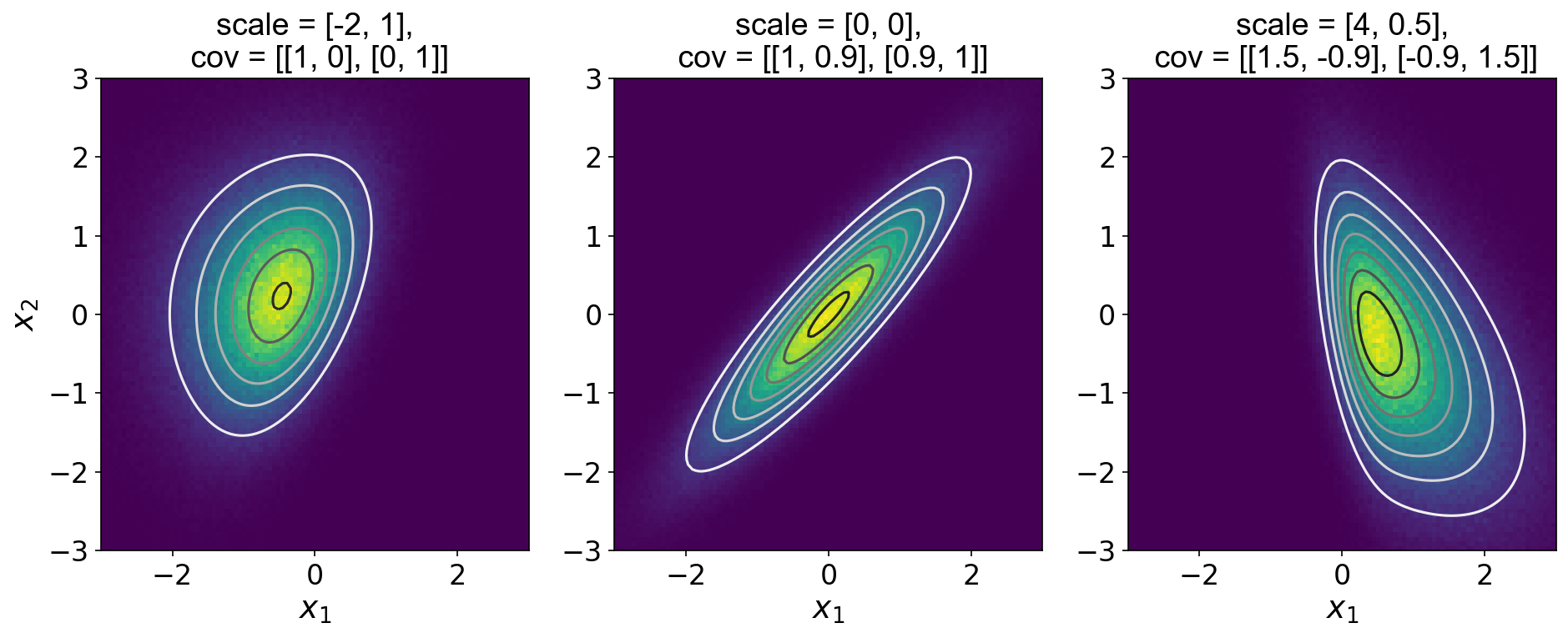

To verify this code, I generated Figure , which plots one million samples from a few different skew normal distributions along with the groundtruth PDF.

- Azzalini, A., & Capitanio, A. (1999). Statistical applications of the multivariate skew normal distribution. Journal of the Royal Statistical Society: Series B (Statistical Methodology), 61(3), 579–602.