The Unscented Transform

The unscented transform, most commonly associated with the nonlinear Kalman filter, was proposed by Jeffrey Uhlmann to estimate a nonlinear transformation of a Gaussian. I illustrate the main idea.

The unscented transform (UT) was originally prosed by Jeffrey Uhlmann as part of his PhD thesis (Uhlmann, 1995), although it is most well-known as a component in the unscented Kalman filter (Julier & Uhlmann, 1997; Wan & Van Der Merwe, 2000). The basic premise of the UT is that it easier to approximate a Gaussian distribution than it is to approximate an arbitrary density after a nonlinear transformation. This is a surprisingly simple and useful idea.

Imagine you have some -dimensional Gaussian distributed variables,

you and want to estimate their density after applying a nonlinear transformation to them:

How would we do this? If the transformation were linear, such as

this would be easy, since an affine transformation of a Gaussian is also a Gaussian. We could estimate the density of in closed form. This is one reason why so many probabilistic models rely on linear-Gaussian assumptions (Roweis & Ghahramani, 1999). For example, in a Kalman filter, we might have some state estimation that we posit is Gaussian distributed. Linear dynamics means we propogate that estimation—and our model uncertainty—forward in time using a linear map. However, it’s less obvious what to do in Eq. , when our transformation is nonlinear.

The UT is the following: compute a subset of points, called sigma points , and propogate them through the nonlinear map . Then estimate a Gaussian distribution as

where and are the sample mean and covariance, respectively. Imagine that this worked; it would be nice because it is relatively cheap, since there are only sigma points. This scales well w.r.t. the dimensions of our data, compared to other methods such as Monte Carlo sampling. While there are different proposals for how to choose sigma points, let’s use the simplest one, proposed by Uhlmann in his PhD thesis.

First, let denote the scaled Cholesky decomposition of the covariance matrix of or

Then the sigma points are

What is this doing? Speaking loosely, a positive definite matrix can be thought of as a multidimensional generalization of a positive number, and the Cholesky decomposition is then a multidimensional generalization of the square root. So we’re taking the square root of our unscaled covariance matrix, i.e. computing a multidimensional standard deviation. Eq. says that our sigma points are the sample mean of , as well as points that are one standard deviation away from that mean in both directions in all dimensions . This is why there are sigma points.

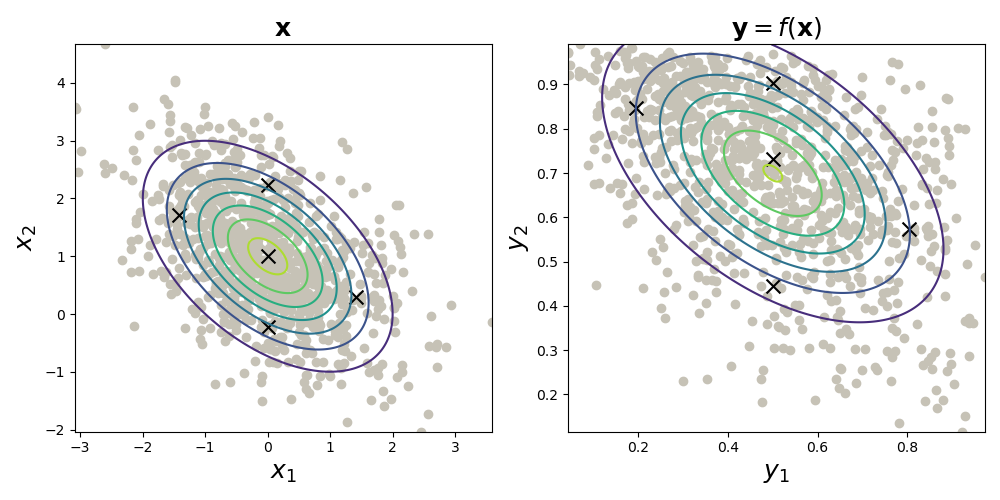

While that’s a mouthful, it’s easy to visualize (Fig. , left). And to estimate a Gaussian density, we simply take the sample mean and sample covariance of (Fig. , right). Here, I’ve used the logistic sigmoid function for .

That’s it. That’s the essence of the UT. There are natural extensions to this idea, such as different ways of computing the sigma points; weighting the sigma points after transforming them; and using this method to estimate non-Gaussian densities. But I think Fig. nicely captures the main idea.

- Uhlmann, J. K. (1995). Dynamic map building and localization: New theoretical foundations [PhD thesis]. University of Oxford Oxford.

- Julier, S. J., & Uhlmann, J. K. (1997). New extension of the Kalman filter to nonlinear systems. Signal Processing, Sensor Fusion, and Target Recognition VI, 3068, 182–193.

- Wan, E. A., & Van Der Merwe, R. (2000). The unscented Kalman filter for nonlinear estimation. Proceedings of the IEEE 2000 Adaptive Systems for Signal Processing, Communications, and Control Symposium (Cat. No. 00EX373), 153–158.

- Roweis, S., & Ghahramani, Z. (1999). A unifying review of linear Gaussian models. Neural Computation, 11(2), 305–345.