The Euler–Lagrange Equation

The physics of Hamiltonian Monte Carlo, part 1: Lagrangian and Hamiltonian mechanics are based on the principle of stationary action, formalized by the calculus of variations and the Euler–Lagrange equation. I discuss this result.

Originally proposed by (Duane et al., 1987), Hybrid Monte Carlo is a Metropolis–Hastings algorithm in which each step is chosen according to the energy in a simulated Hamiltonian dynamical system. This method is now called Hamiltonian Monte Carlo, and the acronym “HMC” remains unchanged. The advantage of HMC is that it can propose distance states that still have high probabilities of being accepted. It is a popular choice over a Gaussian random walk, which may converge more slowly. However, understanding HMC depends on understanding the Hamiltonian reformulation of classical mechanics. While (Neal, 2011) and (Betancourt, 2017) are excellent introductions to HMC, they both expect the reader to already understand Hamilton’s equations. In my mind, Hamiltonian mechanics is the conceptual linchpin that gives any intuition for why the method works. My goal is to bridge this conceptual gap. I hope this is useful for others who are interested in HMC but lack a sufficient background in physics.

This is the first post in a three-part series. In this post, I will frame and motivate the problem that the Euler–Lagrange equation solves, finding the stationary function of a functional. This class of problems is called the calculus of variations. In the next post, I will explain how Lagrangian and Hamiltonian mechanics use the Euler–Lagrange equation to reformulate of Newtonian mechanics. The basic idea will be to reformulate classical mechanics, which models a system of particles by specifying all the system’s forces, as a model in which the total energy is conserved. Finally, in the last post, I will discuss Hamiltonian dynamics in the context of HMC.

With this outline in mind, let’s start with the calculus of variations.

An example of a variational problem

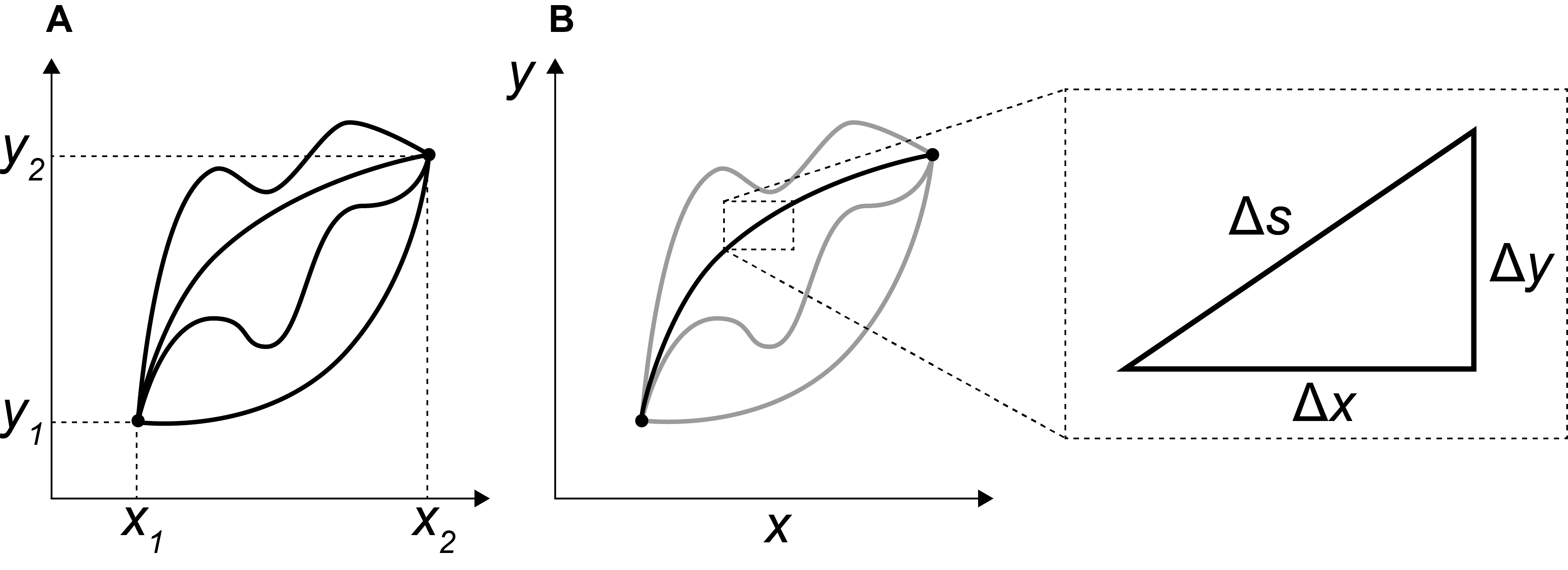

Let’s introduce the calculus of variations through an example. What is the shortest path between two points on a plane, and ? We may intuit that the answer is a straight line, but there are an infinite number of such paths. Can we prove it? One way to formalize the problem is to consider all functions described by the equation

subject to the constraint that

In words, the function can be arbitrarily curved, but it must be fixed at the endpoints (Figure ). We will see that also needs to be continuously differentiable. If we imagine zooming in on a single path (Figure ), we can approximate a finite but very small segments along that path as where

by the Pythagorean theorem. This suggests an infinitesimal representation in terms of the derivative of the function ,

In words, is the infinitesimal distance traveled by infinitesimal changes in the -coordinates as quantified by the first derivative . The total length of the path is given by integrating between the two endpoints,

Finding the shortest path is equivalent to finding the solution of the following minimization problem: among all functions that satisfy the contraint , find the one which minimizes .

The integrated term in is called a functional. If every number in a set corresponds to a number , we say there is defined on the set a function and that is its domain. A functional is a natural generalization of this concept where is a set of mathematical objects of any kind. Thus, a generalization of is

This notation means that the functional may have an explicit dependence on , in addition to the implicit dependencies through , , and so forth. In our example, the functional only depends on .

Since maximizing is equivalent to minimizing , the distinction between maxima and minima is not interesting for functionals, and without loss of generality we focus on minimization. In our case, outputs distance, and therefore minimizing the integral minimizes total distance. However, if were an expression of time, then minimizing would represent minimizing total time. For example, Fermat’s principle, also called the principle of least time, states that the path a ray takes between two points minimizes total time. The Brachistochrone curve is the curve of fastest descent, assuming a frictionless surface and a uniform gravitional field.

These types of problems can all be solved using the calculus of variations. In elementary calculus, a function is stationary when its derivative is zero at a point , meaning it attains a minimum or maximum. In the calculus of variations, a functional is stationary for a particular function when it attains a minimum or maximum.

According to (Aleksandrov et al., 1999), this branch of mathematics became important after connections were made to many problems in physics and classical mechanics. This is because, as we will see, a function that is the stationary function of a functional must satisfy a certain differential equation, the Euler–Lagrange equation; and many problems in physics take the form of differential equations. Thus, the calculus of variations is fascinating because the most famous problems in the field seem to suggest that nature often “knows” to take the most efficient path. This is called the variational principle.

The Euler–Lagrange equation

In calculus, a necessary condition for an extremum of a differentiable function at a point is that . This is easy to visualize: at the top of a hill or the bottom of a valley, a line tangent to the curve is horizontal. Equivalently, the differential of must be zero: . The Euler–Lagrange equation will make a statement that is analogous to but for functionals rather than functions. This is a powerful result because it allows us to exclude any function that does not satisfy this criteria from having extrema.

Given a function , the simplest integral in the calculus of variations, what is the extremum value

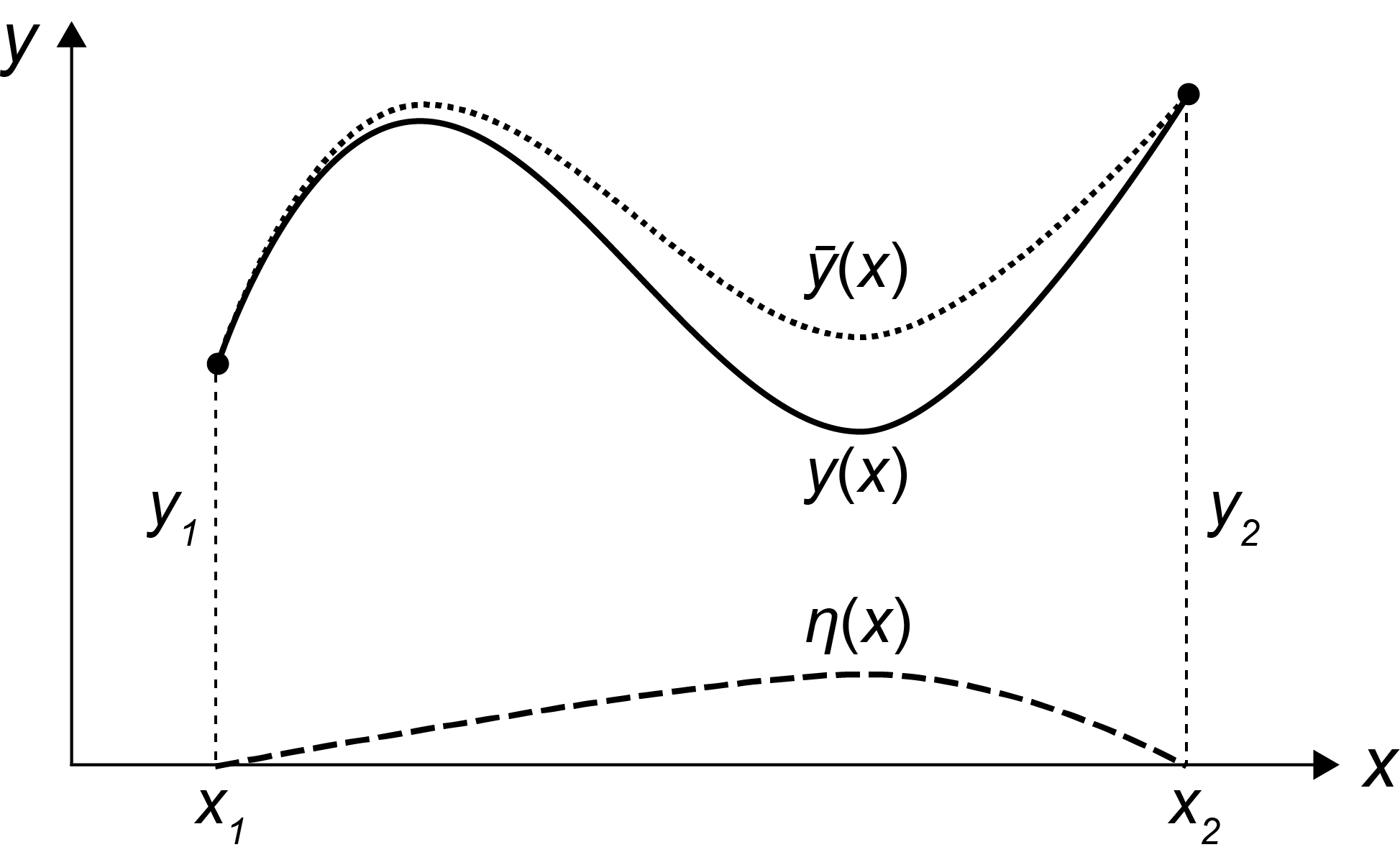

To answer this question, we will use a simple but clever idea. Imagine we have a function that has the same endpoints as but is otherwise possibly offset,

Let be an arbitrary value. Then we need the constraint

This constraint ensures that the functions and share endpoints regardless of the value of or the shape of (Figure ). Furthermore, we assume that is continuously differentiable. The term is called the variational parameter; note that as , the original function is recovered from .

Let’s take the derivative of with respect to the evaluated at zero, set that derivative equal to zero, and then simply. The resulting expression will be the Euler–Lagrange equation, a second-order partial differential equation that expresses the function in terms of its extremum or the stationary point for the functional in .

First, let’s take the derivatives of and with respect to :

Next, taking the derivative of evaluated at and setting it equal to zero, we get

Now we can use integration by parts for the rightmost integral:

Note that based on , when we set , then . Giving us

The term on line cancels because we assume that . We want the above equation to be a minimum for any . This only holds when the bracketed term is zero or

This is the Euler–Lagrange equation. It is a second-order differential equation with respect to the function . If minimizes in , it must satsify . This is analogous to the condition that in ordinary calculus.

The shortest distance

Armed with the Euler–Lagrange equation, let’s solve our example: what is the shortest distance between two points on a plane? Using the definition of in , we want to solve for . First, we know that the leftmost term in is zero or because does not depend on . Next, let’s solve the rightmost partial derivative in :

Putting both results together, we get

Using the quotient rule, we get

If , then is a constant and is a straight line. Put conservely, must be a straight line to satisfy , which is completely analogous to the calculus statement that a fixed point must satisfy the condition . We have proven that the shortest distance between two points is a straight line.

Conclusion

The goal of this post was provide some intuition for and then derive the Euler–Lagrange equation in . This is the foundational idea of the calculus of variations, which has many applications in physics. In the next post, we will use this variational framework to show how Newtonian mechanics can be reformulated into Lagrangian and Hamiltonian mechanics. We will introduce specific functions, called “Lagrangians”, that, when plugged into the Euler–Lagrange equation, reduce to Newton’s laws of motion. Rather than thinking about objects and forces across time, we will think about energy being conserved across time. This will provide good intuition for the final post on Hamiltonian Monte Carlo.

Acknowledgements

I thank Shivani Pandey and Federico Errica for reporting typos.

- Duane, S., Kennedy, A. D., Pendleton, B. J., & Roweth, D. (1987). Hybrid monte carlo. Physics Letters B, 195(2), 216–222.

- Neal, R. M. (2011). MCMC using Hamiltonian dynamics.

- Betancourt, M. (2017). A conceptual introduction to Hamiltonian Monte Carlo. ArXiv Preprint ArXiv:1701.02434.

- Aleksandrov, A. D., Lavrent’ev, M. A., & others. (1999). Mathematics: its content, methods and meaning. Courier Corporation.