Gaussian Process Regression with Code Snippets

The definition of a Gaussian process is fairly abstract: it is an infinite collection of random variables, any finite number of which are jointly Gaussian. I work through this definition with an example and provide several complete code snippets.

When I first learned about Gaussian processes (GPs), I was given a definition that was similar to the one by (Rasmussen & Williams, 2006):

Definition 1: A Gaussian process is a collection of random variables, any finite number of which have a joint Gaussian distribution.

At the time, the implications of this definition were not clear to me. What helped me understand GPs was a concrete example, and it is probably not an accident that both Rasmussen and Williams and Bishop (Bishop, 2006) introduce GPs by using Bayesian linear regression as an example. Following the outlines of these authors, I present the weight-space view and then the function-space view of GP regression. The ultimate goal of this post is to concretize this abstract definition. I provide small, didactic implementations along the way, focusing on readability and brevity.

Weight-space view

In standard linear regression, we have

where our predictor is just a linear combination of the covariates for the th sample out of observations. We can make this model more flexible with fixed basis functions,

where

Note that in Equation , , while in Equation , . Now consider a Bayesian treatment of linear regression that places prior on ,

where is a diagonal precision matrix. In my mind, Bishop is clear in linking this prior to the notion of a Gaussian process. He writes, “For any given value of , the definition [Equation ] defines a particular function of . The probability distribution over defined by [Equation ] therefore induces a probability distribution over functions .” In other words, if is random, then is random as well. This example demonstrates how we can think of Bayesian linear regression as a distribution over functions. Thus, we can either talk about a random variable or a random function induced by .

In principle, we can imagine that is an infinite-dimensional function since we can imagine infinite data and an infinite number of basis functions. However, in practice, we are really only interested in a finite collection of data points. Let

and let be a matrix such that . Then we can rewrite as

Recall that if are independent Gaussian random variables, then the linear combination is also Gaussian for every , and we say that are jointly Gaussian. Since each component of (each ) is a linear combination of independent Gaussian distributed variables (), the components of are jointly Gaussian. Therefore, we can uniquely specify the normal distribution of by computing its mean vector and covariance matrix, which we can do (A1):

If we define as , then we can say that is a Gram matrix such that

where is called a covariance or kernel function,

Note that this lifting of the input space into feature space by replacing with is the kernel trick. Also, keep in mind that we did not explicitly choose ; it simply fell out of the way we setup the problem. In other words, Bayesian linear regression is a specific instance of a Gaussian process, and we will see that we can choose different mean and kernel functions to get different types of GPs.

Rasmussen and Williams’s presentation of this section is similar to Bishop’s, except they derive the posterior , and show that this is Gaussian, whereas Bishop relies on the definition of jointly Gaussian. I prefer the latter approach, since it relies more on probabilistic reasoning and less on computation.

Function-space view

Following the outline of Rasmussen and Williams, let’s connect the weight-space view from the previous section with a view of GPs as functions. These two interpretations are equivalent, but I found it helpful to connect the traditional presentation of GPs as functions with a familiar method, Bayesian linear regression.

Now, let us ignore the weights and instead focus on the function . Furthermore, let’s talk about variables instead of to emphasize our interpretation of functions as random variables. We noted in the previous section that a jointly Gaussian random variable is fully specified by a mean vector and covariance matrix. Alternatively, we can say that the function is fully specified by a mean function and covariance function such that

This is the standard presentation of a Gaussian process, and we denote it as

At this point, Definition , which was a bit abstract when presented ex nihilo, begins to make more sense. With a concrete instance of a GP in mind, we can map this definition onto concepts we already know. The collection of random variables is or , and it can be infinite because we can imagine infinite or endlessly increasing data. And we have already seen how a finite collection of the components of can be jointly Gaussian and are therefore uniquely defined by a mean vector and covariance matrix. We can model more flexible functions by constructing the covariance matrix with different kernel functions.

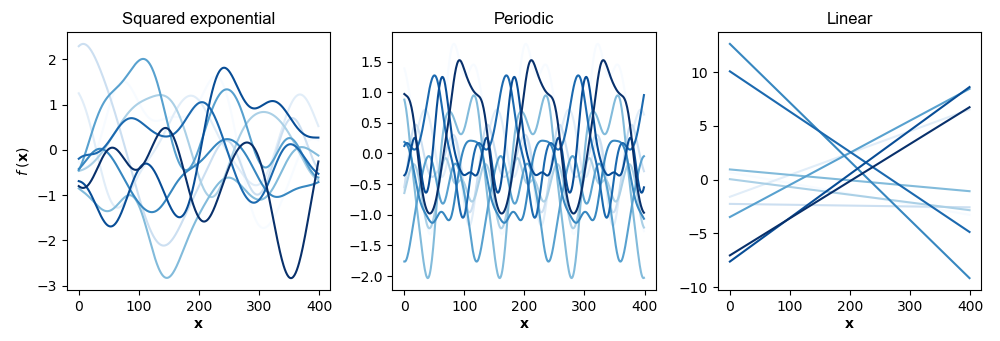

Since we are thinking of a GP as a distribution over functions, let’s sample functions from it (Equation ). To do so, we need to define mean and covariance functions. Let’s use for the mean function, and instead focus on the effect of varying the kernel. Consider these three kernels,

taken from David Duvenaud’s “Kernel Cookbook”. Our data is evenly spaced real numbers between and . Note that GPs are often used on sequential data, but it is not necessary to view the index for as time nor do our inputs need to be evenly spaced. To sample from the GP, we first build the Gram matrix . Let denote the kernel function on a set of data points rather than a single observation, be training data, and be test data. Then sampling from the GP prior is simply,

In the absence of data, test data is loosely “everything” because we haven’t seen any data points yet. Figure shows samples of functions defined by the three kernels above.

In my mind, Figure makes clear that the kernel is a kind of prior or inductive bias. Given the same data, different kernels specify completely different functions. See A2 for the abbreviated code to generate this figure.

Prediction

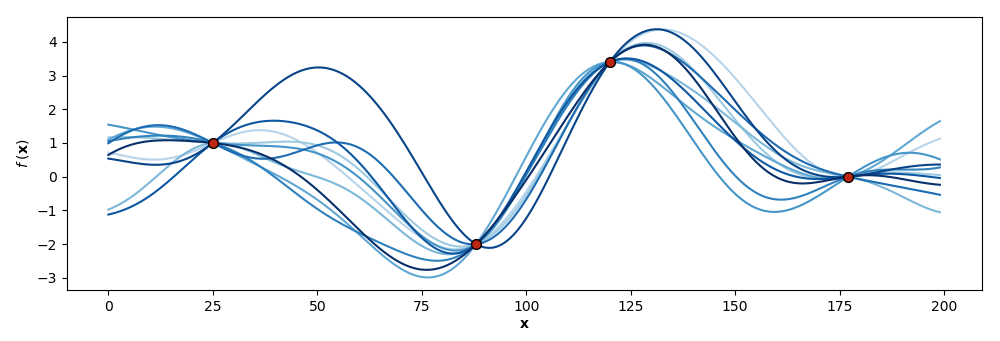

Ultimately, we are interested in prediction or generalization to unseen test data given training data. Intuitively, what this means is that we do not want just any functions sampled from our prior; rather, we want functions that “agree” with our training data (Figure ).

There is an elegant solution to this modeling challenge: conditionally Gaussian random variables. Recall that a GP is actually an infinite-dimensional object, while we only compute over finitely many dimensions. This means the the model of the concatenation of and is

where for ease of notation, we assume . Using basic properties of multivariate Gaussian distributions (see A3), we can compute

While we are still sampling random functions , these functions “agree” with the training data. To see why, consider the scenario when ; the mean and variance in Equation are

In other words, the variance at the training data points is (non-random) and therefore the random samples are exactly our observations .

See A4 for the abbreviated code to fit a GP regressor with a squared exponential kernel. This code will sometimes fail on matrix inversion, but this is a technical rather than conceptual detail for us. Rasmussen and Williams (and others) mention using a Cholesky decomposition, but this is beyond the scope of this post.

Noisy observations

In Figure , we assumed each observation was noiseless—that our measurements of some phenomenon were perfect—and fit it exactly. But in practice, we might want to model noisy observations,

where is i.i.d. Gaussian noise or . Then Equation becomes

while Equation becomes

where

Mathematically, the diagonal noise adds “jitter” to so that . In other words, the variance for the training data is greater than . The reader is encouraged to modify the code to fit a GP regressor to include this noise.

Uncertainty

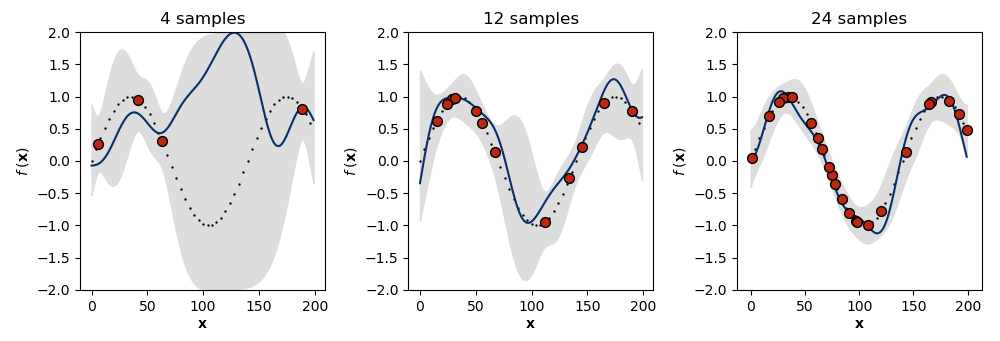

An important property of Gaussian processes is that they explicitly model uncertainty or the variance associated with an observation. This is because the diagonal of the covariance matrix captures the variance for each data point. This diagonal is, of course, defined by the kernel function. For example, the squared exponential is clearly when , while the periodic kernel’s diagonal depends on the parameter . However, recall that the variance of the conditional Gaussian decreases around the training data, meaning the uncertainty is clamped, speaking visually, around our observations.

One way to understand this is to visualize two times the standard deviation ( confidence interval) of a GP fit to more and more data from the same generative process (Figure ). We can see that in the absence of much data (left), the GP falls back on its prior, and the model’s uncertainty is high. However, as the number of observations increases (middle, right), the model’s uncertainty in its predictions decreases. If we modeled noisy observations, then the uncertainty around the training data would also be greater than and could be controlled by the hyperparameter . See A5 for the abbreviated code required to generate Figure .

Conclusion

There is a lot more to Gaussian processes. I did not discuss the mean function or hyperparameters in detail; there is GP classification (Rasmussen & Williams, 2006), inducing points for computational efficiency (Snelson & Ghahramani, 2006), and a latent variable interpretation for high-dimensional data (Lawrence, 2004), to mention a few. However, a fundamental challenge with Gaussian processes is scalability, and it is my understanding that this is what hinders their wider adoption. Exact inference is hard because we must invert a covariance matrix whose sizes increases quadratically with the size of our data, and that operation (matrix inversion) has complexity . At present, the state of the art is still on the order of a million data points (Wang et al., 2019).

Appendix

A1: Simplifying the mean vector and covariance matrix

First, note

Thus, the mean of is

and its covariance is

A2: Sampling from a GP prior

Below is abbreviated code—I have removed easy stuff like specifying colors—for Figure :

import numpy as np

import matplotlib.pyplot as plt

def squared_exponential_kernel(x1, x2):

return np.exp(-1/2. * np.linalg.norm([x1 - x2], 2)**2)

def periodic_kernel(x1, x2):

p = 3

return np.exp(-2 * np.sin((np.pi * np.abs([x1 - x2]))/p)**2)

def linear_kernel(x1, x2):

b = 1; c = 0

return b + ((x1 - c) * (x2 - c))

def apply_kernel(n, kernel):

K = np.empty((n, n))

for i in range(n):

x1 = X[i]

for j in range(n):

x2 = X[j]

K[i, j] = kernel(x1, x2)

return K

fig, axes = plt.subplots(1, 3)

cov_funcs = [squared_exponential_kernel, periodic_kernel, linear_kernel]

n_samples = 400

n_priors = 10

X = np.linspace(-5, 5, n_samples)

for kernel, ax in zip(cov_funcs, axes):

mean = np.zeros(n_samples)

cov = apply_kernel(n_samples, kernel)

for _ in range(n_priors+1):

f = np.random.multivariate_normal(mean, cov)

ax.plot(np.arange(n_samples), f)

plt.show()

A3: Sampling from a conditionally Gaussian distribution

Let and be jointly Gaussian random variables such that

Then the marginal distributions of is

while the conditional distribution is

This is a common fact that can be either re-derived or found in many textbooks.

A4: Fitting a Gaussian process regression model

The naive (and readable!) implementation for fitting a GP regressor is straightforward. Below is an implementation using the squared exponential kernel, noise-free observations, and NumPy’s default matrix inversion function:

import numpy as np

import matplotlib.pyplot as plt

def kernel(X, Y):

K = np.empty((len(X), len(Y)))

for i, x in enumerate(X):

for j, y in enumerate(Y):

K[i, j] = np.exp(-1/2. * np.linalg.norm([x - y], 2)**2)

return K

n_samples = 200

n_train = 10

gen_fn = lambda x: np.sin(2 * np.pi * 5 * x / 700)

inds = np.random.choice(n_samples, n_train)

Y_train = gen_fn(inds)

X_test = np.linspace(-5, 5, n_samples)

X_train = X_test[inds]

K_st = kernel(X_test, X_train)

K_tt = kernel(X_train, X_train)

K_ss = kernel(X_test, X_test)

K_ts = kernel(X_train, X_test)

mean = K_st @ np.linalg.inv(K_tt) @ Y_train

cov = K_ss - (K_st @ np.linalg.inv(K_tt) @ K_ts)

y_pred = np.random.multivariate_normal(mean, cov)

plt.plot(np.arange(n_samples), y_pred)

plt.scatter(inds, Y_train)

plt.show()

A5: Modeling uncertainty

Below is code for plotting the uncertainty modeled by a Gaussian process for an increasing number of data points:

import numpy as np

import matplotlib.pyplot as plt

def kernel(X, Y):

kern = lambda x, y: np.exp(-1/2. * np.linalg.norm([x - y], 2)**2)

K = np.empty((len(X), len(Y)))

for i, x in enumerate(X):

for j, y in enumerate(Y):

K[i, j] = kern(x, y)

return K

def gen_fn(x):

return np.sin(2 * np.pi * 5 * x / 700)

def get_data(n_samples, n_train):

inds = []

while len(inds) < n_train:

r = np.random.randint(n_samples)

if r not in inds:

inds.append(r)

X_train_inds = np.array(inds)

Y_train = gen_fn(X_train_inds)

X_test = np.linspace(-5, 5, n_samples)

X_train = X_test[X_train_inds]

return X_train_inds, Y_train, X_test, X_train

fig, axes = plt.subplots(1, 3)

n_samples = 200

for ax, n_train in zip(axes.flat, [4, 12, 24]):

X_train_inds, Y_train, X_test, X_train = get_data(n_samples, n_train)

K_st = kernel(X_test, X_train)

K_tt = kernel(X_train, X_train)

K_ss = kernel(X_test, X_test)

K_ts = kernel(X_train, X_test)

noise = np.eye(KXX.shape[0]) * 0.05

mean = K_st @ np.linalg.inv(K_tt + noise) @ Y_train

cov = K_ss - (K_st @ np.linalg.inv(K_tt + noise) @ K_ts)

f_test = np.random.multivariate_normal(mean, cov)

ax.plot(np.arange(n_samples), f_test)

std = np.sqrt(np.diag(cov))

ax.fill_between(np.arange(n_samples), mean-2*std, mean+2*std)

ax.scatter(X_train_inds, Y_train)

x = np.arange(0, n_samples, 4)

ax.scatter(x, gen_fn(x))

plt.show()

- Rasmussen, C. E., & Williams, C. K. I. (2006). Gaussian processes for machine learning (Vol. 2, Number 3). MIT Press Cambridge, MA.

- Bishop, C. M. (2006). Pattern Recognition and Machine Learning.

- Snelson, E., & Ghahramani, Z. (2006). Sparse Gaussian processes using pseudo-inputs. Advances in Neural Information Processing Systems, 1257–1264.

- Lawrence, N. D. (2004). Gaussian process latent variable models for visualisation of high dimensional data. Advances in Neural Information Processing Systems, 329–336.

- Wang, K. A., Pleiss, G., Gardner, J. R., Tyree, S., Weinberger, K. Q., & Wilson, A. G. (2019). Exact Gaussian Processes on a Million Data Points. ArXiv Preprint ArXiv:1903.08114.