Estimating Square Roots in Your Head

I explore an ancient algorithm, sometimes called Heron's method, for estimating square roots without a calculator.

Imagine we want to compute the square root of a number . The basic idea of Heron’s method, named after the mathematician and engineer, Heron of Alexandria, is to find a number that is close to and to then average that with the number , which corrects for the fact that either over- or underestimates .

I like this algorithm because it is simple and works surprisingly well. However, I first learned about it in (Benjamin & Shermer, 2006), which did not provide a particularly deep explanation or analysis for why this method works. The goal of this post is to better understand Heron’s method. How does it work? Why does it work? And how good are the estimates?

The algorithm

Let’s demonstrate the method with an example. Consider computing the square root of . We start by finding a number that forms a perfect square that is close to . Here, let’s pick , since . Then we compute a second number, . In practice, computing in your head may require an approximation. Here, we can compute it exactly as . Then our final guess is the average of these two numbers or

which in our example is

That is pretty good. The relative error is less than ! And furthermore, this is pretty straightforward to do in your head when isn’t too large.

Why does this work?

Intuitively, Heron’s method works because is either an over- or underestimate of . Then is an under- or overestimate, respectively, of . So the average of and should be closer to than either or is.

While this was probably Heron’s reasoning, we can offer a better explanation using calculus: Heron’s method works because we’re performing a second-order Taylor approximation around our initial guess. Put more specifically, we’re making a linear approximation of the nonlinear square root function at the point .

To see this, recall that the general form of a Taylor expansion about a point is

where the notation denotes the -th derivative of . If we define , then

and so the second-order Taylor approximation of is

Now choose , and let denote the Taylor expansion around . Then we have

And this is exactly what we calculated above.

Geometric interpretation

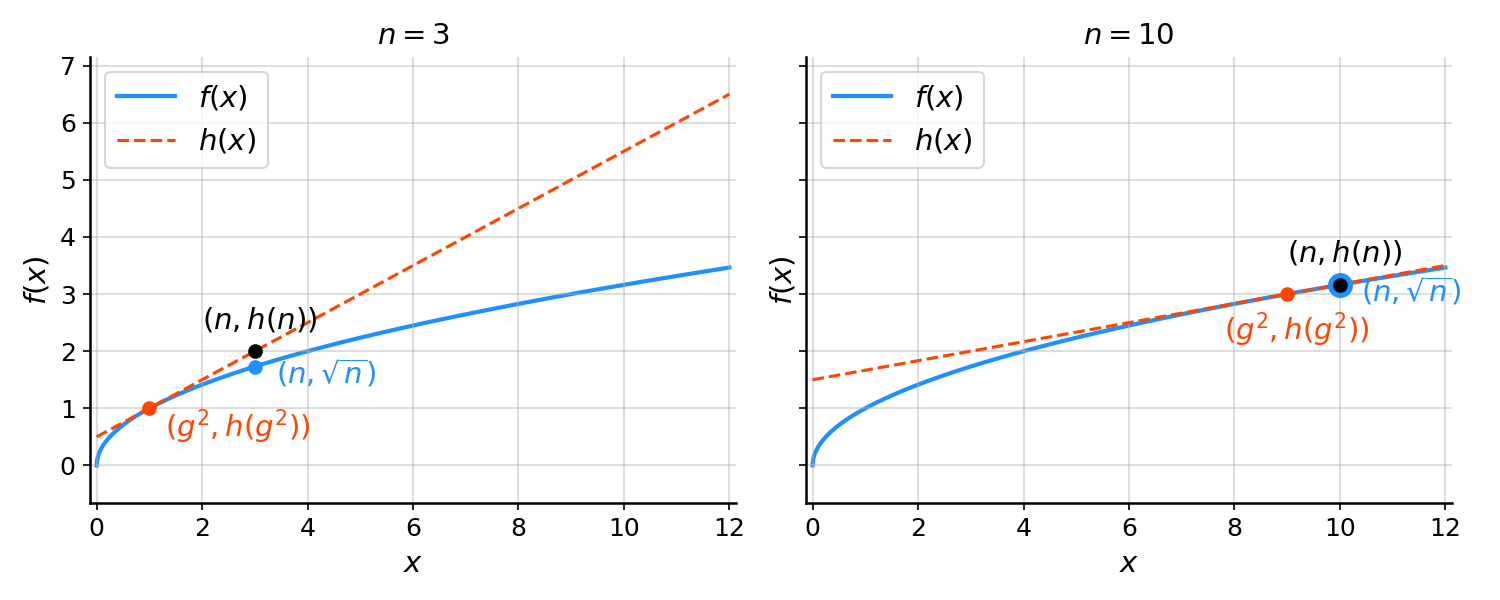

In general, the second second-order Taylor expansion approximates a possibly nonlinear function with a linear function at the point :

Thus, the Taylor approximation represents the tangent line to at the point . We can see this for in Figure . This is a useful visualization because it highlights something interesting: we expect Heron’s approximation to be worse for smaller numbers. That’s because the square root function is “more curved” (speaking loosely) for numbers closer to zero (Figure , left). As the square root function flattens out for larger numbers, the linear approximation improves (Figure , right).

How good is the approximation?

How good is this method? Did we just get lucky with or does Heron’s method typically produce sensible estimates of ?

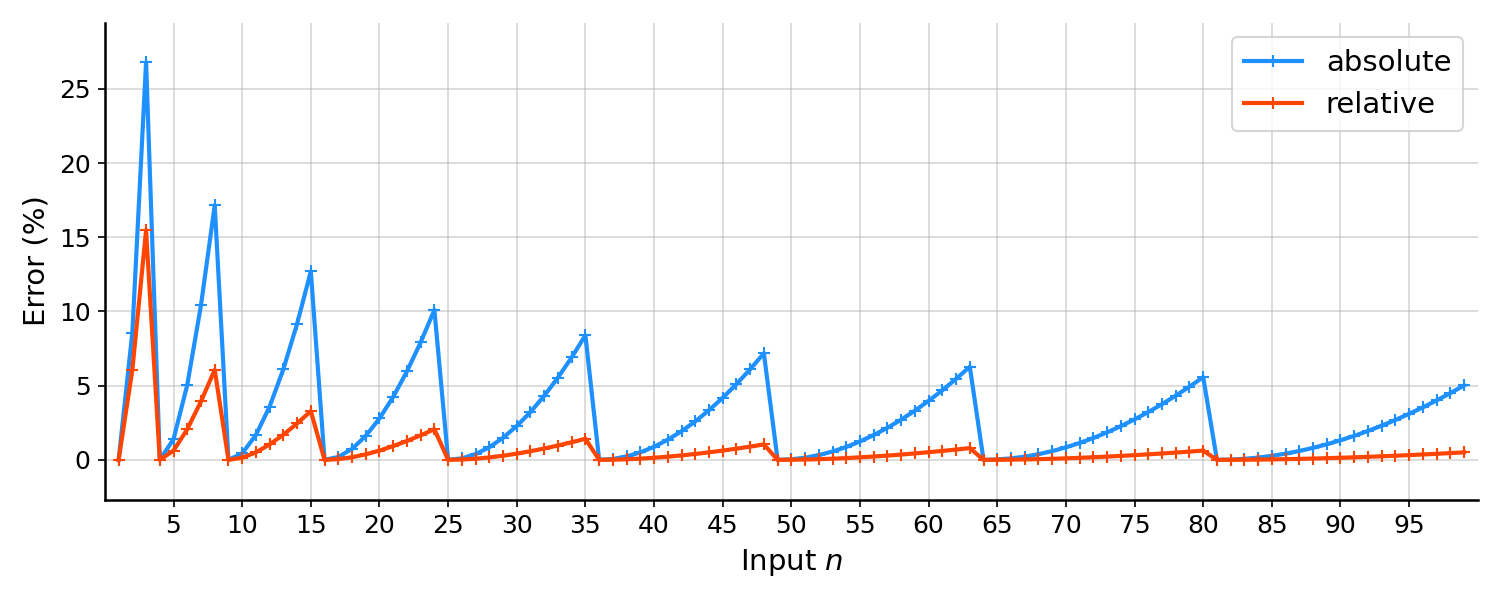

To answer this question, I’m replicating a nice figure from an article from MathemAfrica, in which the author makes a plot with the input number on the -axis and the absolute error

on the -axis (Figure , blue line). (Note that when programming Heron’s

method, we must decide if we want to find by searching numbers greater or

less than ; here, I’ve set as the first integer less than

the square root of , or as g = floor(sqrt(n)).) I like this figure because it captures

two interesting properties of Heron’s method. First, as we discussed above, the

Taylor approximation will typically be worse when is small (when ;

this is not true in general). And second, the error drops to zero on perfect

squares and increases roughly linearly between perfect squares.

The MathemAfrica post focuses on lowering this absolute error by judiciuosly picking the initial guess . This is interesting as analysis. However, in my mind, this is unnecessarily complicated for most practical mental math scenarios, i.e. for quick sanity checking rather than in a demonstration or competition. Why it is overly complicated? Well, the relative error,

rapidly decays to less than a percentage point or so (Figure , red line).

If you’re not using a calculator to compute a square root, you’re probably just getting a rough idea of a problem. And if we actually wanted to lower the absolute error and didn’t care about a human’s mental limits, we should just expand the Taylor approximation to higher orders.

- Benjamin, A., & Shermer, M. (2006). Secrets of mental math: the mathemagician’s guide to lightning calculation and amazing math tricks. Crown.