Standard Errors and Confidence Intervals

How do we know when a parameter estimate from a random sample is significant? I discuss the use of standard errors and confidence intervals to answer this question.

The standard deviation is a measure of the variation or dispersion of data, that is, how spread out the values are. The standard error is the standard deviation of a statistic's sampling distribution. However, this does not mean that the standard error is the empirical standard deviation of the data.[1] Since the sampling distribution of a statistic is the distribution of that statistic over repeated trials, the standard error measures how much the statistic varies from one sample to the next. The larger the sample size $N$, the smaller the standard error should be. Intuitively, the standard error answers the question: how precise is the statistic we are estimating through repeated trials?

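While the standard error can be estimated for other statistics, let’s focus on the mean, that is, the standard error of the mean. Let $X_1, \dots, X_N$ denote a random sample, where the $X_n$ are independent and identically distributed (i.i.d.) with population variance $\sigma^2$. Because of this i.i.d. assumption, the variance of the sample mean $\bar{X} = \frac{1}{N}\sum_{n=1}^{N} X_n$ is:

$$
\mathbb{V}[\bar{X}] = \mathbb{V}\left[\frac{1}{N}\sum_{n=1}^{N} X_n\right] = \frac{1}{N^2}\sum_{n=1}^{N}\mathbb{V}[X_n] = \frac{\sigma^2}{N},
$$

so the standard error of the mean is $\text{SE}(\bar{X}) = \sigma / \sqrt{N}$.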
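A quick way to check $\sigma^2/N$ numerically is to simulate the repeated trials directly. The sketch below uses only the Python standard library; the helper name `empirical_se_of_mean` and the choice of a zero-mean normal population are assumptions made for illustration. It draws many samples of size $N$, takes the standard deviation of their means, and compares that with $\sigma/\sqrt{N}$.

```python
import math
import random
import statistics


def empirical_se_of_mean(sigma: float, n: int, trials: int = 10_000, seed: int = 0) -> float:
    """Hypothetical helper: draw `trials` samples of size `n` from N(0, sigma^2)
    and return the standard deviation of their sample means."""
    rng = random.Random(seed)
    means = [
        statistics.fmean(rng.gauss(0.0, sigma) for _ in range(n))
        for _ in range(trials)
    ]
    return statistics.stdev(means)


sigma, n = 2.0, 25
print(empirical_se_of_mean(sigma, n))  # close to the theoretical value below
print(sigma / math.sqrt(n))            # sigma / sqrt(N) = 0.4
```

Because the seed is fixed, the simulated value is reproducible; it will not match $0.4$ exactly, but it should agree to within about a percent.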

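If the population variance $\sigma^2$ is unknown, we can use the sample variance $s^2$ to approximate the standard error of the mean:

$$
\text{SE}(\bar{X}) \approx \frac{s}{\sqrt{N}}, \qquad s^2 = \frac{1}{N-1}\sum_{n=1}^{N}\left(X_n - \bar{X}\right)^2.
$$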
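In code, this estimate is a one-liner on top of the sample standard deviation. The following is a minimal sketch, assuming the data fit in a Python list; `standard_error_of_mean` is a hypothetical name. Note that `statistics.stdev` already uses the $N - 1$ denominator from the formula above.

```python
import math
import statistics


def standard_error_of_mean(sample: list[float]) -> float:
    """Estimate SE(xbar) = s / sqrt(N), where s is the sample standard
    deviation with the N - 1 (Bessel) correction."""
    n = len(sample)
    if n < 2:
        raise ValueError("need at least two observations to estimate s")
    return statistics.stdev(sample) / math.sqrt(n)


# s^2 = 10 / 4 = 2.5 for this data, so SE = sqrt(2.5 / 5) = sqrt(0.5)
print(standard_error_of_mean([1.0, 2.0, 3.0, 4.0, 5.0]))  # ≈ 0.7071
```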

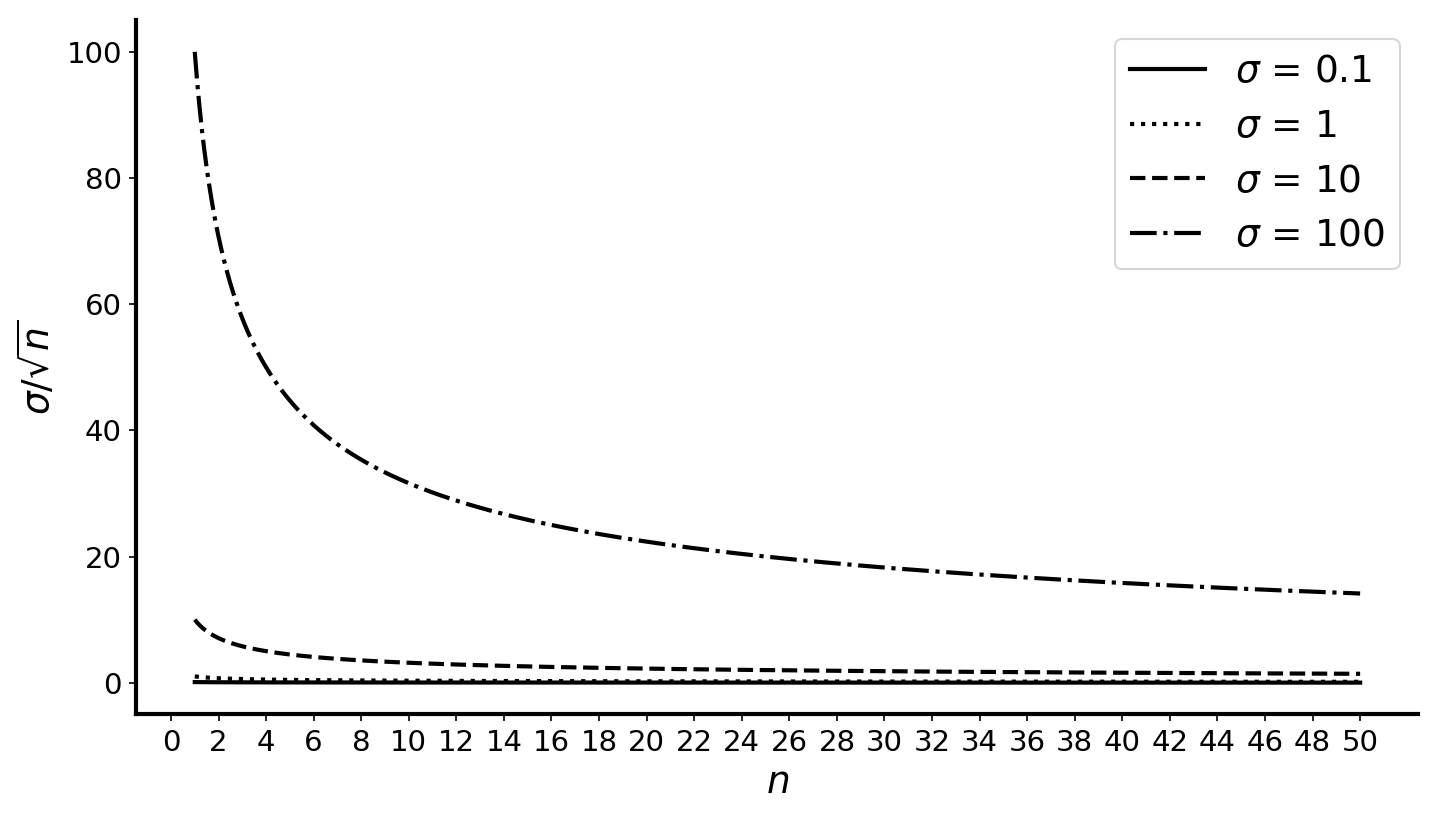

To see the effect of dividing by $\sqrt{N}$, consider Figure 1, which plots the standard error as a function of $N$. To reduce a given standard error by half, we need four times as many samples:

$$
\frac{s}{\sqrt{4N}} = \frac{1}{2} \cdot \frac{s}{\sqrt{N}}.
$$

For example, if $N$ samples give a standard error of $s/\sqrt{N}$, then reducing this standard error to $s/(2\sqrt{N})$ requires $4N$ samples.
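The same arithmetic can be run in reverse: given a target standard error, solve $s/\sqrt{N} \le \text{target}$ for $N$. This is a small sketch with hypothetical helper names and hypothetical values ($s = 1$, $N = 25$) chosen only for illustration.

```python
import math


def se_from_s(s: float, n: int) -> float:
    """Standard error of the mean given a standard deviation s and sample size n."""
    return s / math.sqrt(n)


def samples_needed(s: float, target_se: float) -> int:
    """Smallest N with s / sqrt(N) <= target_se, i.e. N >= (s / target_se) ** 2.
    The tiny epsilon guards against floating-point round-up (e.g. 100.0000001)."""
    return math.ceil((s / target_se) ** 2 - 1e-9)


s, n = 1.0, 25                     # hypothetical values
se = se_from_s(s, n)
print(se)                          # 0.2
print(samples_needed(s, se / 2))   # 100, i.e. 4 * 25
```

Because $N$ grows with the square of $s / \text{target}$, every halving of the standard error costs a factor of four in samples.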

Put differently, think about what would happen if we didn’t divide our estimate by $\sqrt{N}$. In this case, we would just be estimating the standard deviation of the data. This may be a nice thing to do, maybe even what we want to do, but it is not estimating the standard deviation of the mean itself.
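The difference is easy to see empirically: as $N$ grows, the sample standard deviation settles near the population value, while the standard error keeps shrinking. The sketch below assumes a hypothetical normal population with standard deviation 3; `spread_table` is an illustrative name, not from any library.

```python
import math
import random
import statistics


def spread_table(ns, population_sd: float = 3.0, seed: int = 42):
    """For each sample size n, return (n, s, SE) where s is the sample
    standard deviation and SE = s / sqrt(n)."""
    rng = random.Random(seed)
    rows = []
    for n in ns:
        sample = [rng.gauss(0.0, population_sd) for _ in range(n)]
        s = statistics.stdev(sample)  # spread of the data: stays near population_sd
        se = s / math.sqrt(n)         # spread of the mean: shrinks like 1 / sqrt(n)
        rows.append((n, s, se))
    return rows


for n, s, se in spread_table((10, 100, 1_000, 10_000)):
    print(f"N={n:>6}  s={s:.3f}  SE={se:.4f}")
```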

Confidence intervals

Standard errors are closely related to confidence intervals. A confidence interval specifies a range of plausible values for a population parameter, such as the mean. Each confidence interval has an associated confidence level. Ideally, we want both narrow intervals and high confidence levels. Note that confidence intervals are random, since they are themselves functions of the random sample $X_1, \dots, X_N$.

This is a nuanced topic with many common statistical misconceptions. Therefore, let’s stick to a single simple example that illustrates this relationship; please consult a textbook for a more thorough treatment. Imagine we want to estimate the population mean $\mu$ of a random variable, which we assume is normally distributed with known variance $\sigma^2$. We can compute a standard score as

$$
Z = \frac{\bar{X} - \mu}{\sigma / \sqrt{N}}.
$$

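There are multiple definitions of the standard score. This version measures the difference between the sample mean $\bar{X}$ and the population mean $\mu$ in units of the standard error, which is why we normalize by the standard error $\sigma/\sqrt{N}$ rather than by the population standard deviation $\sigma$. For example, $Z = 2$ means that the sample mean lies two standard errors above $\mu$.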
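One way to see why this normalization is useful: if we repeat the experiment many times, the resulting $Z$ values should look like draws from a standard normal distribution (mean 0, standard deviation 1). The sketch below assumes a hypothetical population $\mathcal{N}(10, 2^2)$ with $\sigma$ known; `standard_score` is an illustrative name.

```python
import math
import random
import statistics


def standard_score(sample, mu: float, sigma: float) -> float:
    """Z = (xbar - mu) / (sigma / sqrt(N)), assuming sigma is known."""
    n = len(sample)
    return (statistics.fmean(sample) - mu) / (sigma / math.sqrt(n))


# Hypothetical population N(mu=10, sigma=2); repeat the experiment many times.
rng = random.Random(0)
mu, sigma, n = 10.0, 2.0, 30
zs = [
    standard_score([rng.gauss(mu, sigma) for _ in range(n)], mu, sigma)
    for _ in range(5_000)
]
print(statistics.fmean(zs), statistics.stdev(zs))  # close to 0 and 1
```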

We can therefore compute numbers $-z$ and $z$ such that

$$
\mathbb{P}\left(-z \leq Z \leq z\right) = 1 - \alpha,
$$

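where $1 - \alpha$ is our confidence level. If we set $\alpha = 0.05$, then we are finding the interval $[-z, z]$ that contains the standard score with 95% probability. Since $Z$ has been normalized, it follows a standard normal distribution, so we can compute $z$ using the standard normal cumulative distribution function $\Phi$:

$$
1 - \alpha = \Phi(z) - \Phi(-z) = 2\Phi(z) - 1 \quad \Longrightarrow \quad z = \Phi^{-1}\left(1 - \frac{\alpha}{2}\right) \approx 1.96 \quad \text{for } \alpha = 0.05.
$$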
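The inverse CDF is available in Python's standard library through `statistics.NormalDist`, so no external package is needed for this step. The function name `critical_value` is hypothetical; the computation is $z = \Phi^{-1}(1 - \alpha/2)$.

```python
from statistics import NormalDist


def critical_value(alpha: float) -> float:
    """Return z with P(-z <= Z <= z) = 1 - alpha for Z ~ N(0, 1),
    i.e. z = Phi^{-1}(1 - alpha / 2)."""
    return NormalDist().inv_cdf(1 - alpha / 2)


z = critical_value(0.05)
print(round(z, 2))                                 # 1.96
print(NormalDist().cdf(z) - NormalDist().cdf(-z))  # ≈ 0.95
```

Smaller $\alpha$ (higher confidence) gives a larger $z$ and hence a wider interval, which is the trade-off between narrow intervals and high confidence.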

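We’re just backing out the value $z$ given a fixed confidence level specified by $\alpha$. In other words, we decide how confident we want to be, and then compute how wide our interval must be for that desired confidence level. We can now solve for a confidence interval around the true population mean; it is a function of our sample mean, the standard error, and $z$:

$$
1 - \alpha = \mathbb{P}\left(-z \leq \frac{\bar{X} - \mu}{\sigma / \sqrt{N}} \leq z\right) = \mathbb{P}\left(\bar{X} - z \frac{\sigma}{\sqrt{N}} \leq \mu \leq \bar{X} + z \frac{\sigma}{\sqrt{N}}\right).
$$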

As we can see, if we compute our sample mean and then add and subtract roughly two standard errors ($z \approx 1.96$), we get a confidence interval with a confidence level of 95%: if we repeated the experiment many times, about 95% of the intervals constructed this way would contain the true mean parameter $\mu$.
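The "repeated experiment" reading of the confidence level can be checked by simulation. This sketch assumes a hypothetical normal population with known $\sigma$; it builds the interval $\bar{X} \pm z\,\sigma/\sqrt{N}$ for many independent samples and counts how often it contains $\mu$.

```python
import math
import random
from statistics import NormalDist, fmean


def mean_confidence_interval(sample, sigma: float, alpha: float = 0.05):
    """(1 - alpha) interval for the mean of a normal population with known
    standard deviation sigma: xbar +/- z * sigma / sqrt(N)."""
    n = len(sample)
    z = NormalDist().inv_cdf(1 - alpha / 2)
    half_width = z * sigma / math.sqrt(n)
    xbar = fmean(sample)
    return xbar - half_width, xbar + half_width


# Hypothetical population N(mu=5, sigma=1). The interval is random, so it
# should contain mu in roughly 95% of repeated experiments.
rng = random.Random(1)
mu, sigma, n, trials = 5.0, 1.0, 20, 4_000
hits = 0
for _ in range(trials):
    low, high = mean_confidence_interval([rng.gauss(mu, sigma) for _ in range(n)], sigma)
    hits += low <= mu <= high
coverage = hits / trials
print(coverage)  # close to 0.95
```

Any single interval either contains $\mu$ or it does not; the 95% describes the procedure, not one particular interval.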

Example

As an example, imagine I want to compare two randomized trials. A common example is a medical trial, but in machine learning research, we might want to compare two randomized algorithms: our method and a baseline. If we simply run both algorithms a few times and compare a mean metric, for example the mean accuracy, we may not be able to say anything about our model’s performance relative to the baseline. Intuitively, we do not have enough precision in our estimate of the metric, and what we want to do is increase $N$ to shrink the standard error and increase our confidence in the estimate.

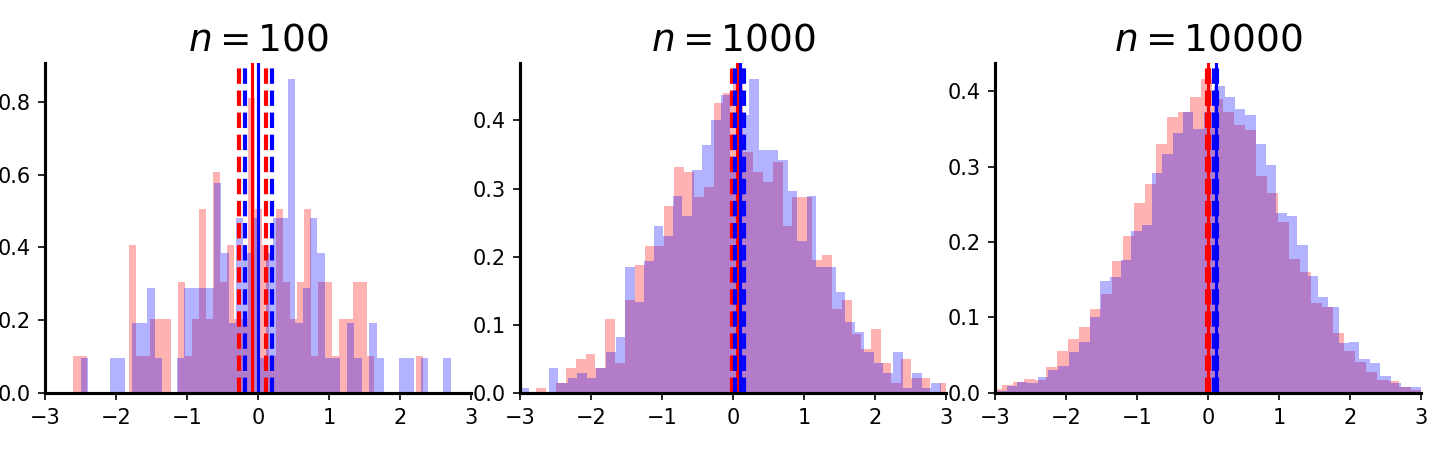

Consider Figure 2. To generate this plot, I drew $N$ realizations from each of two normal distributions with different means, for increasing values of $N$. I then computed the standard score and plotted the confidence interval around each sample mean. As we can see, for small $N$ it is not possible to distinguish between the mean estimates of the two random samples, because their confidence intervals overlap. However, once $N$ is large enough that the intervals no longer overlap, we have a statistically significant result.
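A rough sketch of this kind of experiment follows. The distribution parameters (unit-variance normals whose means differ by 0.3) are hypothetical stand-ins, not the ones used for the figure, and the sketch plugs in the sample standard deviation $s$ for $\sigma$ with $z \approx 1.96$, a normal approximation. Helper names are illustrative.

```python
import math
import random
import statistics
from statistics import NormalDist

Z95 = NormalDist().inv_cdf(0.975)  # ≈ 1.96


def ci(sample):
    """Approximate 95% interval: xbar +/- 1.96 * s / sqrt(N), using s in place of sigma."""
    xbar = statistics.fmean(sample)
    half = Z95 * statistics.stdev(sample) / math.sqrt(len(sample))
    return xbar - half, xbar + half


def intervals_overlap(a, b) -> bool:
    """True if the closed intervals a = (lo, hi) and b = (lo, hi) intersect."""
    return a[0] <= b[1] and b[0] <= a[1]


# Hypothetical "baseline" and "our method": means differ by 0.3.
rng = random.Random(7)
for n in (5, 20, 100, 1_000):
    baseline = [rng.gauss(0.0, 1.0) for _ in range(n)]
    ours = [rng.gauss(0.3, 1.0) for _ in range(n)]
    a, b = ci(baseline), ci(ours)
    print(f"N={n:>5}  baseline=({a[0]:.2f}, {a[1]:.2f})  "
          f"ours=({b[0]:.2f}, {b[1]:.2f})  overlap={intervals_overlap(a, b)}")
```

Non-overlapping intervals are a conservative signal: two intervals can overlap slightly while a direct test on the difference of means is still significant.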

Conclusion

Statistical significance is a complicated topic, and I’m by no means an expert. However, even the basic background in this post shows why it is such an important topic. In my numerical experiments, I could simply increase $N$ to get the confidence intervals I wanted. However, if I were running a clinical trial, I might have to fix $N$ in advance. In many machine learning papers, researchers report the mean and standard deviation without, I suspect, realizing that the standard deviation is simply the standard deviation of the sample (e.g., of the accuracies across randomized runs), not the standard deviation of the estimated mean (i.e., the standard error of the average accuracy). I refer the reader again to the footnote.
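To make the distinction concrete, here is a small sketch that reports both quantities for a set of runs. The accuracies are hypothetical numbers invented for illustration, and `summarize_runs` is an illustrative name.

```python
import math
import statistics


def summarize_runs(scores):
    """Return (mean, sample standard deviation, standard error of the mean)
    for a list of per-run metrics, e.g. accuracies from randomized runs."""
    mean = statistics.fmean(scores)
    sd = statistics.stdev(scores)     # spread of individual runs
    se = sd / math.sqrt(len(scores))  # uncertainty of the mean itself
    return mean, sd, se


# Hypothetical accuracies from five runs with different random seeds.
accuracies = [0.81, 0.79, 0.84, 0.80, 0.83]
mean, sd, se = summarize_runs(accuracies)
print(f"mean={mean:.3f}  sd={sd:.3f}  se={se:.3f}")
```

Reporting "mean ± sd" describes how much individual runs vary; "mean ± se" (or a confidence interval built from it) describes how precisely the mean is known.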

[1] Can you tell that’s what I thought this meant?