An Example of Probabilistic Machine Learning

Probabilistic machine learning is a useful framework for handling uncertainty and modeling generative processes. I explore this approach by comparing two models, one with and one without a clear probabilistic interpretation.

A probabilistic approach

My first course in machine learning was Andrew Ng’s Coursera course. This class, like many other introductory resources, primarily focused on machine learning as prediction by function approximation. In this context, the goal of learning, whether we use logistic regression or neural networks, is to find parameters for a function such that the difference or loss between the predicted values and target labels is minimized:

But there is another way to view our data . Rather than view our data “statically”, we can view it as random observations drawn i.i.d. from an underlying process with a true distribution . In other words, we want to find statistical parameters such that:

What this framing suggests is that learning is really the task of modeling this underlying process by inferring parameters . This inference procedure is formalized using Bayes’ theorem (I have labeled each term with its standard name in Bayesian inference):

The prior is so-named because we specify a distribution over the parameters, thus capturing both our uncertainty and prior knowledge about the parameters. The evidence is computed by marginalizing over the parameters. This framing has many benefits, but I will focus on two: handling uncertainty and modeling generative processes.

Handling uncertainty

Without probability theory, the best function parameters given our data are fixed and do not encode our uncertainty about the parameters. But with a probabilistic approach, modeling our data given this uncertainty has a natural mathematical solution, namely marginalization:

This captures the idea that we model the data by considering all possible parameter values. Note that a central challenge for Bayesian researchers is computing this marginal distribution because it often requires either high-dimensional integration, which is computationally hard in general, or has no known analytic solution. While other researchers might focus on optimization of model parameters, Bayesian researchers often focus on techniques like MCMC and variational inference for efficiently approximating integrals.

Modeling generative processes

Every well-defined probabilistic model is also a generative model because we have learned a density. First, we sample parameters from our prior and then use our learned likelihood to condition on the parameters. Generating new “fantasy” data helps us to inspect what the model has learned about our observations. We’ll see a concrete example of this later.

Two clustering algorithms: -means and a GMM

There’s a lot to unpack in my brief introduction, and I want to elaborate on the main points by comparing a non-probabilistic model, -means, with a probabilistic model, a Gaussian mixture model. These models perform the same task of clustering, and it will be useful to compare them since only one has a probabilistic interpretation.

K-means clustering

-means clustering is an unsupervised learning algorithm for partitioning data points, each with dimensionality , into groups or clusters. Each cluster is represented by a single parameter , which is a -vector representing the cluster’s mean. Given data , the goal is to find cluster assignments such that the total intra-cluster squared Euclidean distance from the cluster mean is minimized. (You can also think of this as finding clusters with low variance.)

To express this as a loss, let’s introduce a one-hot vector which denotes the cluster assignment for the -th data point. Then the objective to minimize is:

Our goal is to learn the mean parameters and cluster assignments . The algorithm for fitting this model is an iterative procedure:

- Initialize the means somehow.

- Repeat until convergence:

- Assign each data point to its closest mean. Formally, minimize w.r.t keeping fixed.

- Update the means. Formally, minimize w.r.t keeping fixed.

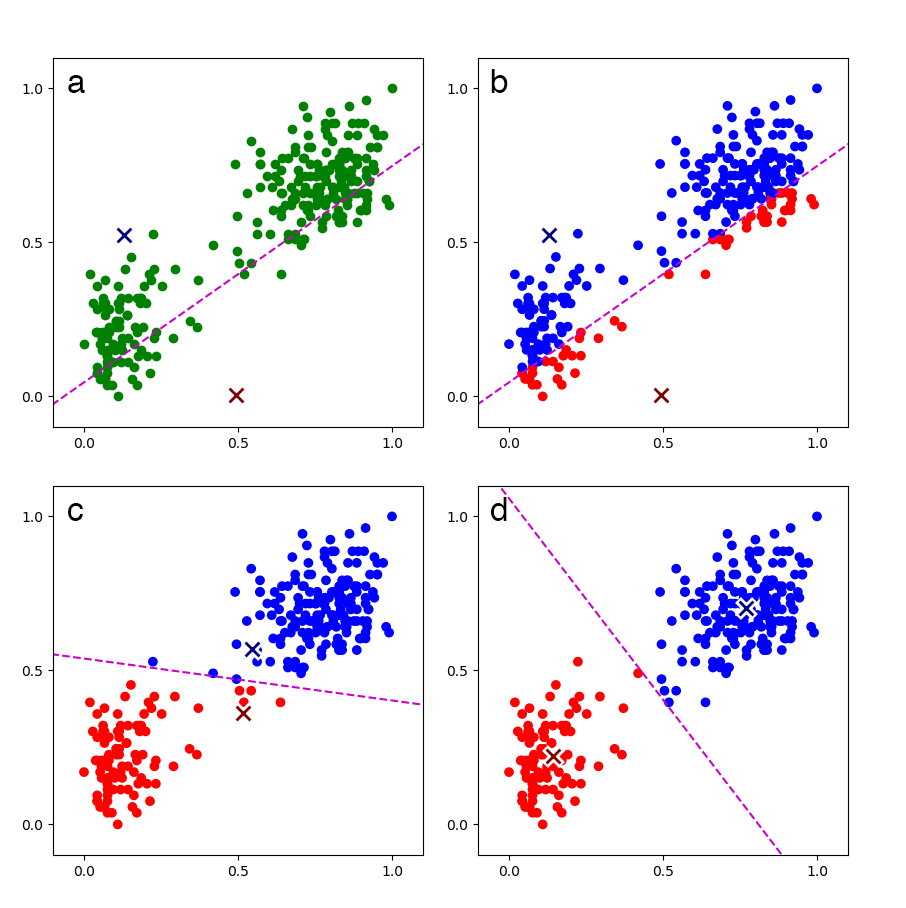

See Bishop, section 9.1 (Bishop, 2006) for a complete explanation and derivation. For commented code with references to Bishop, see my GitHub repository. At a high level, the algorithm is fairly straightforward and easy to visualize (see Figure 1).

Convergence can be assessed by monitoring the loss after each iteration. Once the loss has converged, we have a -parameter model that allows us to cluster or label new, unseen data. For example, once the model is trained on Old Faithful data as in Figure 1, we can assign new Old Faithful data to one of the two clusters.

Gaussian mixture model

Note that nothing about -means is probabilistic. There are no statistical parameters. There is no learned density. We don’t handle any uncertainty about our means , and we cannot generate new data from this model.

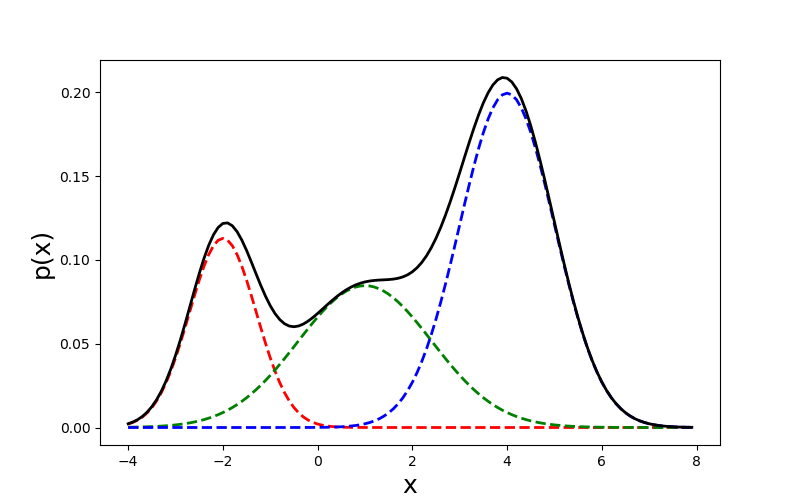

To approach the problem from a probabilistic perspective, we could use a mixture model. A mixture model represents a density as a mixture of weighted densities. For example, a Gaussian mixture model or GMM is a mixture model in which the component densities are all Gaussian. This idea is easy to visualize with univariate Gaussians (Figure 2):

We can formalize a Gaussian mixture model as the sum of weighted Gaussian densities, called components, each with its own mean , covariance , and mixture weight :

For example, the mixture model in Figure 2 can be written as:

Since and , this implies . Furthermore, we can see that the mixture weights must sum to :

Gaussian mixture models can be fit, i.e. we can learn the most likely parameters , , and given our data, in a variety of ways. Perhaps the most common method is expectation—maximization or the EM algorithm. At a high-level, the EM algorithm is similar to the -means algorithm. It iteratively finds the most likely parameters given the data, runs until convergence, and depends on parameter initialization.

Once again, see Bishop, section 9.2 (Bishop, 2006) for an explanation and derivation, but see the Mathematics for Machine Learning by Deisenroth et al. (Deisenroth et al., 2018) for an extremely thorough derivation with proofs for the EM updates. For commented code with references to Bishop, see my GitHub repository.

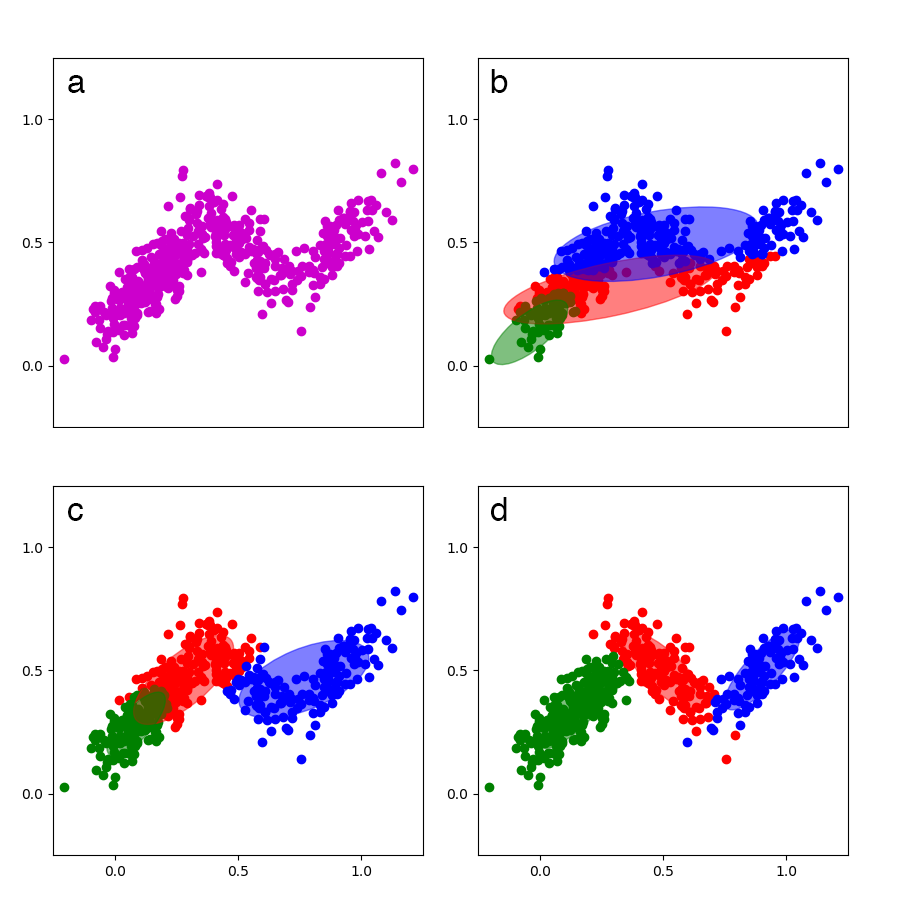

To help visualize GMMs, I created a small () synthetic dataset—I am not using the Old Faithful dataset here because I want to demonstrate how GMMs handle overlapping densities—with three multivariate Gaussian densities, trained a GMM on the data, and then visualized the model at various iterations (Figure 3).

Comparing the models

Now that we have a shared understanding of and notation for -means and GMMs, let’s compare them. Both models are trained using iterative algorithms to find the best parameters to cluster the data. Both models use a small number of parameters to model the data; if -means uses parameters, GMMs use parameters (means, variances, and weights). But a GMM is a probabilistic model, and I argued that two major benefits are (1) handling uncertainty and (2) modeling generative processes. Let’s re-consider both.

Handling uncertainty

With -means, the best parameters do not capture any of our uncertainty about what the parameters should or could be. With a GMM, we handle this uncertainty by marginalization. To see this, let’s construct a GMM as a latent variable model in which each data point is generated by only one component. Using an indicator variable to indicate an assignment to the -th cluster, we can write the conditional probability given as:

And if we let be a one hot vector of indicator variables, then the prior on and the conditional distribution are:

Note that if we marginalize out the latent variable, we get the same GMM model we specified earlier:

This marginalization over the latent variables is the mathematical formulation of the idea that we’re considering all possible values of .

Modeling generative processes

By sampling from a probabilistic or generative model, we can enrich our understanding of what the model learned. The latent variable construction elucidates how we sample from a GMM. Since depends on the mixture weights , we first sample cluster assignments from a categorical distribution with probabilities that are the learned mixture weights:

This ensures that the number of fantasy data points for each cluster is proportional to what we saw in our observed data. Next, for each sampled cluster assignment , we sample from a multivariate Gaussian with our learned parameters and :

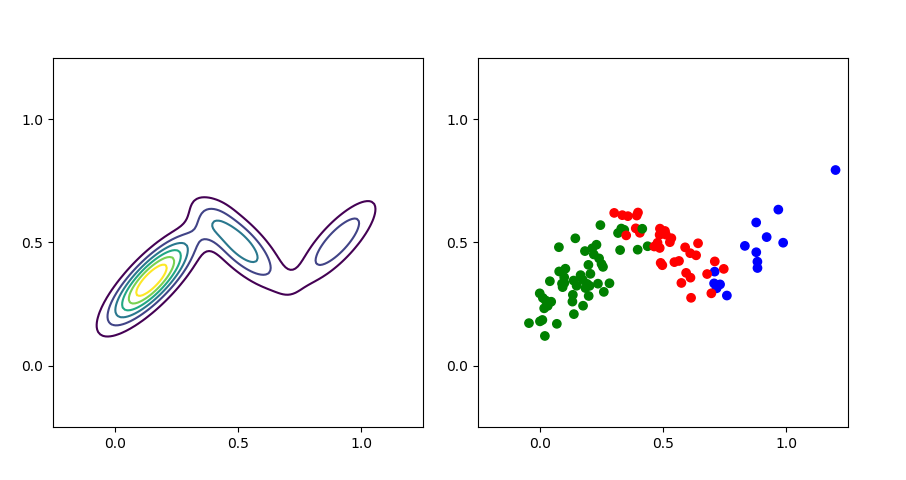

I have visualized the learned density and some fantasy samples using this procedure in Figure 4.

In my mind, this visualization (Figure 4) really emphasizes the mixture weights. It visualizes the mathematical fact that the blue density has the least amount of mass (Figure 4, left) and that as a consequence it is the least likely to be sampled from (Figure 4, right). We can inspect the model’s learned mixture weights and see that this is true:

The actual mixture weights for the synthetic dataset are , , and respectively.

Conclusion

There are many other benefits to probabilistic machine learning that I did not have time to mention. One that interests me greatly is compositionality through graphical models (Wainwright & Jordan, 2008). Because probabilistic models are built on probability theory, it is often much easier to understand their behavior and theoretical properties as they are combined to form larger systems. Compare this compositionality with deep neural networks. We might be able to attach two convolutional neural networks to build a multimodal system, but it is unclear how to interpret such a model, much less what its theoretical guarantees are. Probabilistic machine learning is a useful tool for composing models into complex but interpretable systems.

It took me a while to really internalize the benefits of probabilistic machine learning, and I hope I conveyed the utility of this approach by example. For additional reading from an expert on this topic, I would highly recommend Zoubin Ghahramani’s Nature review article (Ghahramani, 2015).

- Bishop, C. M. (2006). Pattern Recognition and Machine Learning.

- Deisenroth, M., Faisal, A. A., & Ong, C. S. (2018). Mathematics for Machine Learning. In URL:https://mml-book.github.io/.

- Wainwright, M. J., & Jordan, M. I. (2008). Graphical models, exponential families, and variational inference. Foundations and Trends® in Machine Learning, 1(1–2), 1–305.

- Ghahramani, Z. (2015). Probabilistic machine learning and artificial intelligence. Nature, 521(7553), 452.